👋 Hi! I’m Bibin Wilson. In each edition, I share practical tips, guides, and the latest trends in DevOps and MLOps to make your day-to-day DevOps tasks more efficient. If someone forwarded this email to you, you can subscribe here to never miss out!

✉️ In Today’s Newsletter

In today’s deep dive, I break down what MLOps means for DevOps engineers. It covers:

What is MLOps

Skills Required For DevOps Engineers

Key MLOps Tools

Do you need GPU-based clusters to learn MLOps?

So if you are ready to go “all in” on MLOps, this edition is for you.

🧰 Remote Job Opportunities

Care Access - DevOps Engineer

Rackspace Technology - AWS DevOps Engineer III

CUCULUS - Senior DevOps Engineer

BlueMatrix - DevOps Engineer

Velotio Technologies - DevOps Engineer

Opsly - DevOps Engineer

Uplers - Data Ops ML Engineer

🔥 30% OFF Linux Foundation Sale

Use code DCUBE30 at kube.promo/devops

Or save more than 38% using the following bundles.

Master Kubernetes and crack the CKA with my Kubernetes + CKA preparation course bundle: deep-dive explanations, real-world examples, and rich illustrations, all in text format. No more wasting hours on videos. Learn faster and revisit concepts instantly.

I have been talking about MLOps with the DevOps community for quite a few years but never got a chance to publish detailed content on it.

With this edition, I am trying to fill that gap by shedding light on what MLOps means for a DevOps engineer and how you can approach learning it to get opportunities on MLOps-related projects.

DevOps to MLOps

First of all, moving from DevOps to MLOps is not a career switch. MLOps is an extension of DevOps, not a separate career path.

Anyone who says otherwise likely does not fully understand how MLOps works.

DevOps engineers are already part of ML projects, working alongside other teams such as data scientists, data engineers, and ML developers.

You will also be doing familiar DevOps work: setting up CI/CD, writing infrastructure as code and Python scripts, designing pipelines to orchestrate workflows, and so on.

The main difference is in the tools, platforms, technologies, and workflows, and in how ML pipelines are built and managed.

In short, you are a DevOps engineer with expertise in managing machine learning operations. At the core, you still follow DevOps principles, which focus on collaborating efficiently with different teams.

So what is MLOps, really?

MLOps (Machine Learning Operations) is a set of practices for streamlining and automating the lifecycle of machine learning models, from development to deployment and maintenance.

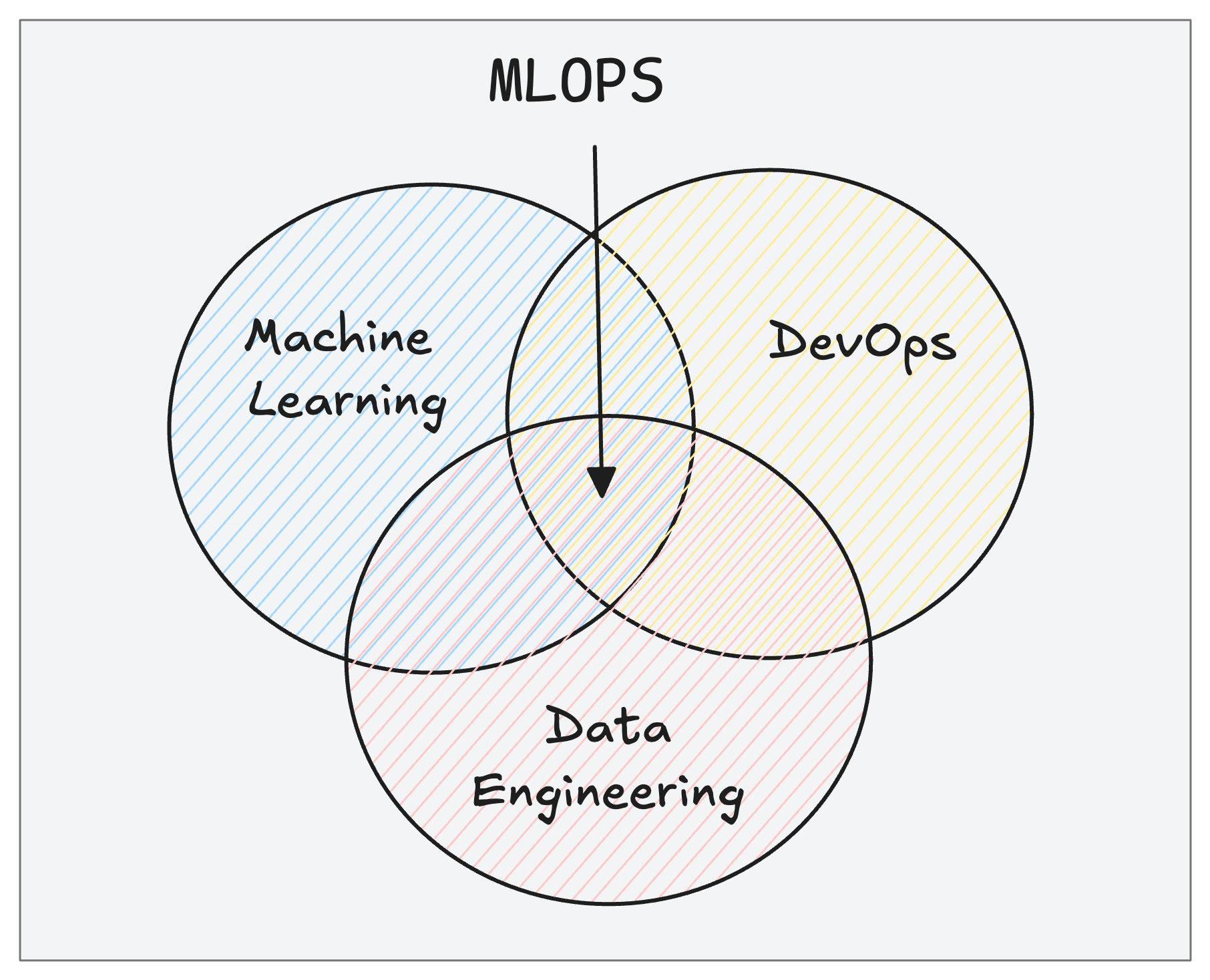

MLOps = DevOps + Machine Learning + Data Engineering

If you follow DevOps culture and practices for ML projects, you can call it MLOps.

Now the key question

How is MLOps different from usual DevOps workflows?

MLOps is different from the usual DevOps workflows, just like machine learning is different from traditional software development.

For example,

In MLOps, you need to track three components: code, data, and models (while in traditional DevOps, you only track code).

Additionally, MLOps introduces the following.

Data versioning: To track changes in datasets using tools like DVC (Data Version Control) or lakeFS

Experiment tracking: To log hyperparameters, metrics, and model versions for each training run

Model registry: To version and manage models separately from application artifacts

Continuous Training (CT): Beyond CI/CD, models need regular retraining as data changes
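To make "track code, data, and models" concrete, here is a minimal plain-Python sketch of the bookkeeping that tools like DVC and MLflow automate: fingerprint a dataset by its content, then log each training run with the code version, hyperparameters, metrics, data hash, and model version. The function names, record fields, and values are hypothetical illustrations, not any tool's API.

```python
import hashlib
import json
import time


def dataset_fingerprint(rows):
    """Content hash of a dataset; identical data always yields the same hash."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes serialization deterministic regardless of dict key order.
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


def log_run(registry, code_version, params, metrics, data_hash, model_version):
    """Append one training-run record tying together code, data, and model versions."""
    record = {
        "run_id": len(registry) + 1,
        "timestamp": time.time(),
        "code_version": code_version,  # e.g. a Git commit SHA
        "params": params,              # hyperparameters for this run
        "metrics": metrics,            # evaluation results for this run
        "data_hash": data_hash,        # which dataset version was used
        "model_version": model_version,
    }
    registry.append(record)
    return record


# Illustrative usage with made-up values.
runs = []
data_v1 = dataset_fingerprint([{"cpu": 0.7, "label": 1}, {"cpu": 0.2, "label": 0}])
log_run(runs, "abc123", {"learning_rate": 0.1}, {"accuracy": 0.9}, data_v1, "1")
print(runs[0]["data_hash"] == data_v1)
```

If the dataset changes, its fingerprint changes, so every run record shows exactly which data produced which model. That link is what makes retraining and rollbacks reproducible.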

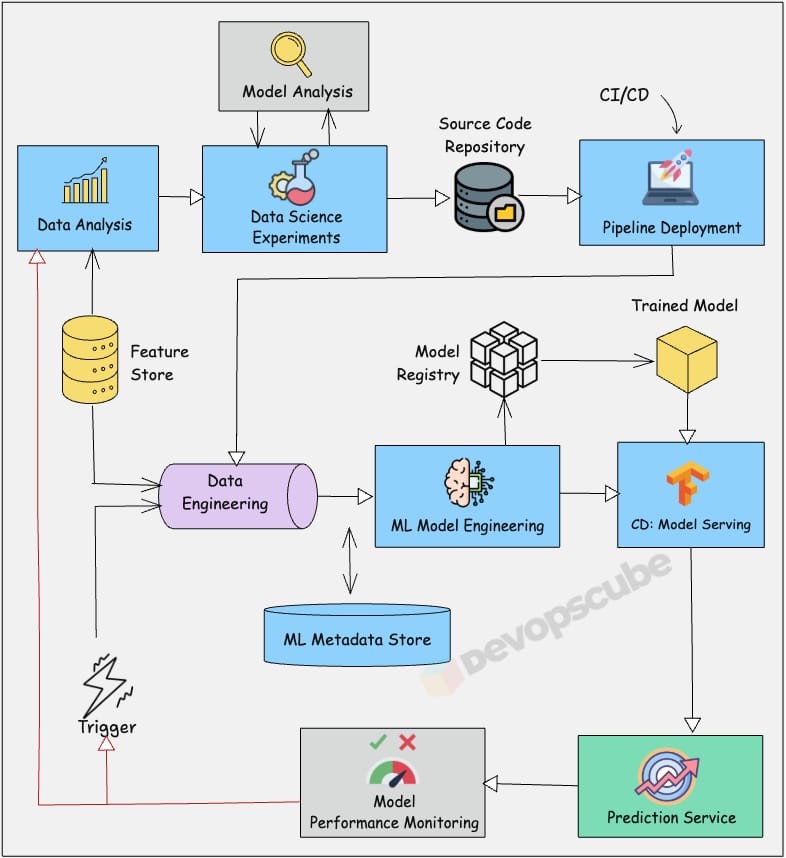

The following image illustrates the high level MLOPS workflow on how a machine learning model is built, deployed, and monitored in production.

Skills Required For DevOps Engineers

DevOps engineers do not need to know how to build ML models or understand complex ML algorithms.

But for a DevOps engineer to efficiently collaborate, build, and maintain ML infrastructure, they should have an understanding of the following:

Basic ML terminology and concepts: For example, model training, feature engineering, inference, hyperparameters, what model artifacts are, etc.

ML workflow: How ML experiments work, why models need retraining, what model drift means, etc.

ML infrastructure requirements: Computing resource needs for training, storage requirements for datasets, model serving patterns, GPU vs CPU requirements.

ML-specific monitoring: Understanding data drift, model performance degradation, prediction latency, and feature distribution changes (different from traditional application monitoring).

Once you have a good understanding of the above-mentioned ML basics, you will be able to have effective conversations with data scientists, design appropriate infrastructure, set up proper monitoring, developer-centric workflows and CI/CD pipelines, just as you would in a typical DevOps project.
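As an example of how ML-specific monitoring differs from uptime checks, here is a minimal sketch of data drift detection using the Population Stability Index (PSI), one common way to compare a feature's production distribution against its training distribution. It uses equal-width bins over the combined range for simplicity (many implementations bin by training-data quantiles instead), and the 0.25 threshold is a commonly cited rule of thumb, not a universal standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample (expected) and a production sample (actual)."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if every value is identical

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # the maximum value goes in the last bin
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


train_values = [float(x) for x in range(100)]       # a feature as seen at training time
prod_values = [float(x) + 50 for x in range(100)]   # the same feature, shifted in production
psi = population_stability_index(train_values, prod_values)
print(f"PSI = {psi:.2f}, drifted = {psi > 0.25}")
```

The application can be perfectly healthy while PSI climbs. That is exactly the kind of signal that would trigger an alert or a retraining job in an MLOps setup.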

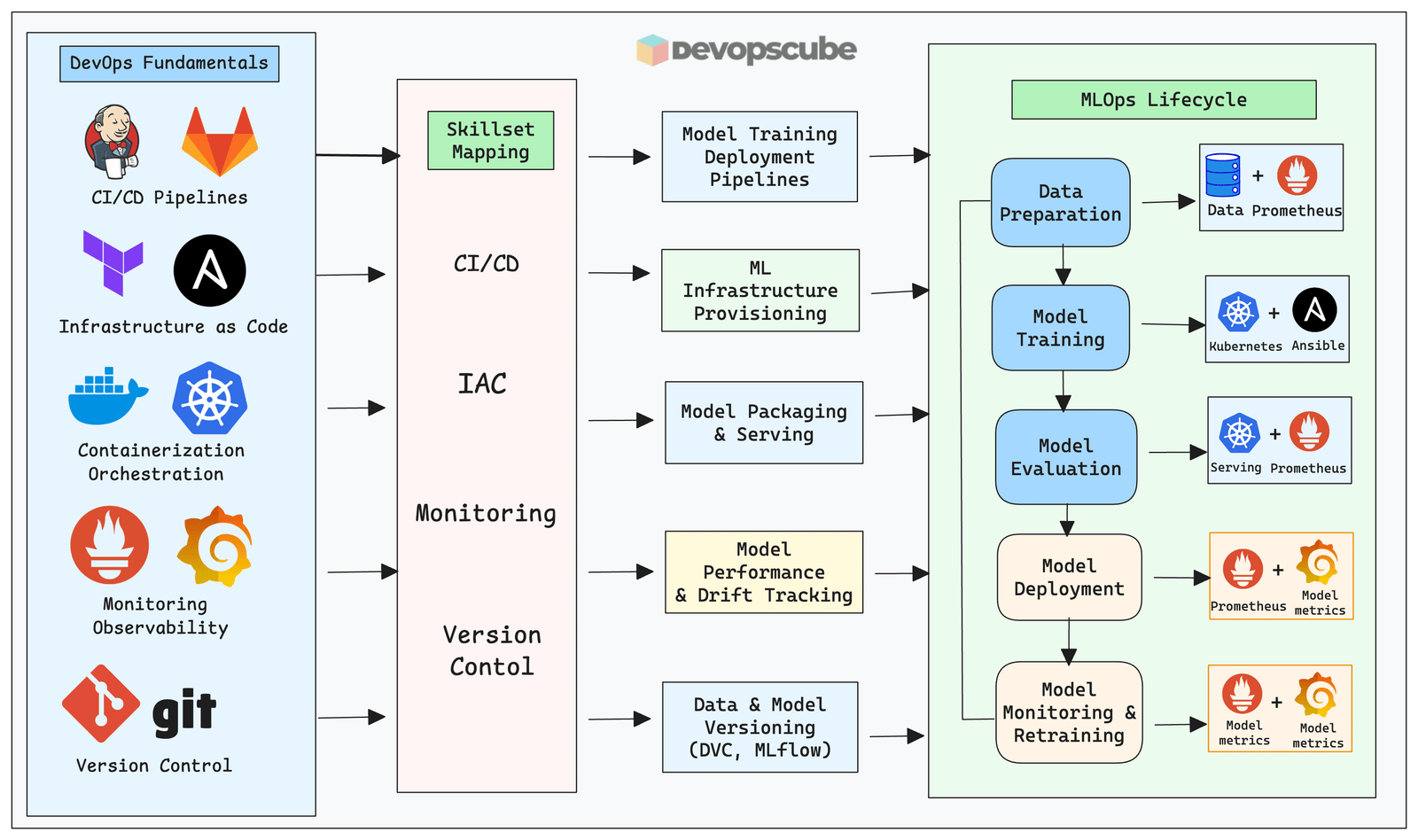

The following image illustrates the skill mapping from DevOps to MLOps.

Next step would be Large Language models (LLMs). Many ML systems today integrate LLMs into their workflows.

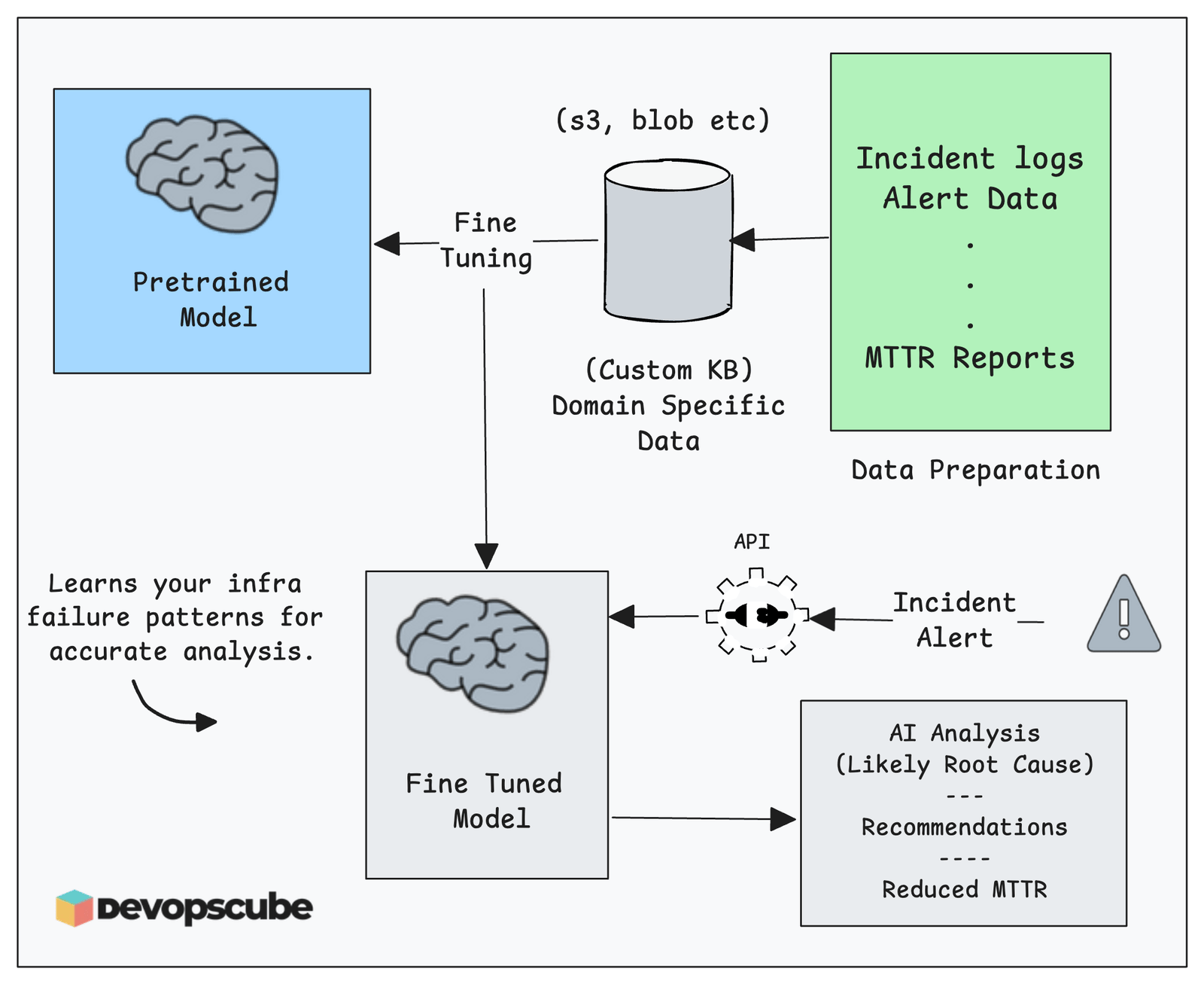

For example, DevOps/SRE team use LLMs to read logs, analyze metrics, and suggest what might be wrong with a system integrating with observability tools.

The following image illustrates an example root cause analysis using fine tuned LLMs.

From an LLM perspective, you need to learn the following:

Working with managed LLM APIs (OpenAI, Anthropic, Gemini)

Self-hosted models (LLaMA, Mistral, Mixtral) for in-house enterprise use cases

Fine-tuning LLMs for specific use cases.

Serving large models efficiently (vLLM, TensorRT-LLM)

Managing Kubernetes GPU clusters and costs

API gateway patterns for LLM applications

Monitoring token usage, latency, and costs

Retrieval Augmented Generation (RAG)
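For the "monitoring token usage, latency, and costs" item, here is a minimal sketch of a wrapper that records tokens and latency for every LLM call and estimates spend. The per-1K-token prices are hypothetical placeholders, and `client_fn` is a hypothetical stand-in for whatever SDK you use; it is assumed to return the response text plus input and output token counts.

```python
import math
import time
from dataclasses import dataclass, field

# Hypothetical prices per 1,000 tokens; substitute your provider's real pricing.
PRICE_PER_1K_TOKENS = {"input": 0.001, "output": 0.002}


@dataclass
class LLMUsageTracker:
    calls: int = 0
    input_tokens: int = 0
    output_tokens: int = 0
    latencies_s: list = field(default_factory=list)

    def record(self, input_tokens, output_tokens, latency_s):
        self.calls += 1
        self.input_tokens += input_tokens
        self.output_tokens += output_tokens
        self.latencies_s.append(latency_s)

    def estimated_cost(self):
        return (self.input_tokens / 1000) * PRICE_PER_1K_TOKENS["input"] + (
            self.output_tokens / 1000
        ) * PRICE_PER_1K_TOKENS["output"]

    def p95_latency_s(self):
        ordered = sorted(self.latencies_s)
        if not ordered:
            return 0.0
        return ordered[max(0, math.ceil(0.95 * len(ordered)) - 1)]  # nearest-rank percentile


def tracked_call(tracker, client_fn, prompt):
    """Wrap an LLM call; client_fn must return (text, input_tokens, output_tokens)."""
    start = time.perf_counter()
    text, in_tokens, out_tokens = client_fn(prompt)
    tracker.record(in_tokens, out_tokens, time.perf_counter() - start)
    return text


tracker = LLMUsageTracker()
fake_client = lambda prompt: ("stub answer", 100, 50)  # stands in for a real API client
tracked_call(tracker, fake_client, "Why is the checkout pod restarting?")
print(tracker.calls, tracker.input_tokens, tracker.output_tokens)  # prints: 1 100 50
```

In production you would export these counters to Prometheus or a similar system instead of keeping them in memory, but the idea stays the same: every LLM call is metered.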

Another trend I am seeing is the rise of AI agent workflows in DevOps/SRE. Companies are working on autonomous systems that can perform tasks, use tools, and make decisions.

To work on these systems as a DevOps engineer, you will need to understand the following:

Agentic workflows and orchestration

Tool integration patterns

State management for agents

Cost and safety guardrails

This list is not exhaustive, but you get the gist.
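Here is a minimal sketch tying together state management, tool integration, and cost/safety guardrails in an agent loop. The planner is hypothetical (in a real system it would be an LLM call), the tool is a stub, and the integer "budget units" are an assumption for illustration; real agent frameworks differ in the details.

```python
def get_pod_status(name):
    """Stub tool; a real one would query the Kubernetes API."""
    return f"{name}: CrashLoopBackOff"


# Allowlist: the agent can only call tools registered here.
TOOLS = {"get_pod_status": get_pod_status}


def run_agent(plan_next_step, goal, max_steps=5, budget=10, cost_per_step=1):
    """Minimal agent loop with explicit state, a tool allowlist, and step/cost limits."""
    state = {"goal": goal, "history": [], "spent": 0}
    for _ in range(max_steps):
        if state["spent"] + cost_per_step > budget:
            state["history"].append(("stopped", "budget exceeded"))
            break
        action = plan_next_step(state)  # hypothetical planner, e.g. an LLM call
        state["spent"] += cost_per_step
        if action["type"] == "finish":
            state["history"].append(("finish", action["answer"]))
            break
        tool = TOOLS.get(action["tool"])
        if tool is None:
            # Safety guardrail: refuse tools that are not allowlisted.
            state["history"].append(("blocked", action["tool"]))
            continue
        state["history"].append((action["tool"], tool(**action["args"])))
    return state


def scripted_planner(state):
    """Scripted stand-in for an LLM planner, so the loop can run offline."""
    step = len(state["history"])
    if step == 0:
        return {"type": "tool", "tool": "delete_namespace", "args": {}}
    if step == 1:
        return {"type": "tool", "tool": "get_pod_status", "args": {"name": "checkout"}}
    return {"type": "finish", "answer": "checkout pod is crash-looping"}


result = run_agent(scripted_planner, "Diagnose checkout errors")
for entry in result["history"]:
    print(entry)
```

The dangerous `delete_namespace` request is blocked, the allowed tool runs, and the loop stops on a finish action, a step limit, or a spent budget, whichever comes first.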

MLOps Tools

As a DevOps engineer, you are likely already familiar with the following tools.

Today, these are mandatory skills.

Docker

Kubernetes

IaC tools like Terraform

Experience with cloud platforms (AWS, GCP, Azure)

CI/CD tools like Jenkins, GitHub Actions, etc.

Monitoring tools like Prometheus, Grafana, etc.

Python, FastAPI, etc.

The tools listed above are general DevOps tools and technologies.

In addition to these, for MLOps you also need to learn tools that work with data and AI/ML workflows in enterprise-grade ML systems:

MLflow (Experiment tracking, model registry)

Kubeflow (End-to-end ML pipelines on Kubernetes)

Feature stores (Feast, Tecton, etc., to centralize feature management)

Vector databases (Pinecone, Weaviate for RAG and LLM applications)

Airflow (batch workflows)

KServe (model serving)

Evidently AI (ML observability & monitoring)

DVC (Data version control)

The following are some LLM-specific tools:

vLLM, TensorRT-LLM (For high-performance LLM inference)

LangChain, LlamaIndex (LLM orchestration frameworks)
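To demystify what a vector database does in a RAG setup, here is a toy in-memory version of its core operation: store vectors with their text and return the most similar ones to a query by cosine similarity. Real systems like Pinecone or Weaviate add approximate nearest-neighbor indexes, persistence, and filtering. The class and method names here are hypothetical, and the hand-made 3-dimensional vectors stand in for real embedding-model output.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self):
        self.items = []  # list of (item_id, vector, text)

    def upsert(self, item_id, vector, text):
        # Replace any existing entry with the same ID, then add the new one.
        self.items = [it for it in self.items if it[0] != item_id]
        self.items.append((item_id, vector, text))

    def query(self, vector, top_k=2):
        # Brute-force scan: score every stored vector, highest similarity first.
        scored = [(cosine(vector, v), item_id, text) for item_id, v, text in self.items]
        scored.sort(reverse=True)
        return [(item_id, text, score) for score, item_id, text in scored[:top_k]]


store = ToyVectorStore()
store.upsert("disk-runbook", [0.9, 0.1, 0.0], "Clean up logs when the disk is full")
store.upsert("oom-runbook", [0.1, 0.9, 0.0], "Raise memory limits after OOMKilled events")
store.upsert("dns-runbook", [0.0, 0.1, 0.9], "Check CoreDNS when name resolution fails")
print(store.query([0.8, 0.2, 0.0], top_k=1))
```

In a RAG pipeline, the retrieved text is then added to the LLM prompt as context, which is how an assistant can answer from your own runbooks.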

Getting Started With MLOps

Whenever I talk about MLOps, the most common question I get is,

Do we need GPU-based clusters to learn MLOps?

The short answer is no - you do not need a GPU to learn MLOps.

For learning and testing, CPUs are more than enough to deploy sample models. You can start by building simple models like logistic regression or decision trees.
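To show how little compute a starter model needs, here is a sketch of one-feature logistic regression trained with plain batch gradient descent, with no libraries and no GPU. In practice you would use something like scikit-learn; the function names, learning rate, epoch count, and toy dataset here are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def train_logistic_regression(xs, ys, lr=0.1, epochs=1000):
    """Batch gradient descent for logistic regression with a single feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # gradient of log loss w.r.t. the logit
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


# Tiny, already-centered toy dataset: one feature, binary label.
xs = [-4.0, -3.0, -2.0, -1.0, 1.0, 2.0, 3.0, 4.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic_regression(xs, ys)
print([predict(w, b, x) for x in (-2.5, 2.5)])  # prints [0, 1]
```

This trains in well under a second on a laptop CPU, which is all you need to practice packaging, deploying, and monitoring a model.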

Once you have a model, you can practice the full MLOps lifecycle using tools like Kubeflow and MLflow, including:

Packaging the model

Deploying the model

Monitoring model performance

Automating ML pipelines
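The lifecycle steps above can be sketched as a single plain-Python pipeline: train, evaluate on holdout data, gate on a quality threshold, package with a content-based version ID, and deploy. Tools like Kubeflow Pipelines or Airflow run each stage as a separate orchestrated task; here every function is a hypothetical stand-in, the "model" is just a weight and bias, and "deploy" means loading it into an in-memory serving slot.

```python
import hashlib
import json


def package(model):
    """Serialize the model and derive a content-based version ID."""
    blob = json.dumps(model, sort_keys=True)
    return {"version": hashlib.sha256(blob.encode("utf-8")).hexdigest()[:12], "blob": blob}


def deploy(artifact, serving):
    """'Deploy' by loading the artifact into a serving slot (a stand-in for KServe or similar)."""
    serving["model"] = json.loads(artifact["blob"])
    serving["version"] = artifact["version"]


def accuracy(model, data):
    """Fraction of (x, y) pairs where the prediction w*x + b >= 0 matches the label."""
    hits = sum(1 for x, y in data if (model["w"] * x + model["b"] >= 0) == (y == 1))
    return hits / len(data)


def run_pipeline(train_fn, train_data, holdout_data, serving, min_accuracy=0.9):
    """Train -> evaluate -> gate -> package -> deploy, like CI/CD for a model."""
    model = train_fn(train_data)
    score = accuracy(model, holdout_data)
    if score < min_accuracy:
        # Quality gate: a weak model never reaches serving.
        return {"deployed": False, "accuracy": score}
    artifact = package(model)
    deploy(artifact, serving)
    return {"deployed": True, "accuracy": score, "version": artifact["version"]}


serving = {}
train_data = [(-3, 0), (-1, 0), (1, 1), (3, 1)]
holdout = [(-2, 0), (2, 1)]
fake_train = lambda data: {"w": 1.0, "b": 0.0}  # hypothetical stand-in for a real training step
print(run_pipeline(fake_train, train_data, holdout, serving))
```

The quality gate plays the same role as a failing test in a regular CI pipeline: automation only promotes a model that meets the bar you define.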

To do this, you will still need a decent Kubernetes cluster with enough CPU and memory, because tools like Kubeflow and MLflow consume a fair amount of resources (e.g., a 3-node Kubernetes cluster on spot instances, each node with 4 CPU cores and 8 GB RAM).

This approach helps you learn the workflow without worrying about expensive hardware.

Running CPU-based experiments can cost $50-100/month on cloud platforms, while GPU instances can easily run $500+/month.

Many modern MLOps setups are also moving toward foundation models and AI agents.

In these cases, you don't train models from scratch. Instead, you fine-tune existing models or use them directly through APIs from providers such as OpenAI.

This allows you to build and test real MLOps pipelines at a much lower cost while focusing on what really matters: operationalizing models and AI workflows.

The next key question

When should one consider moving to GPU-based learning?

Once you understand the "plumbing" of MLOps, such as how data flows through the pipeline, how models are versioned, and how deployments are monitored, you can start working with GPU-based setups if you have access to them or can afford them.

At this point, you can learn how to set up GPU-based Kubernetes clusters and deploy workloads that need GPU access. This includes using Kubernetes device plugins and features like Dynamic Resource Allocation (DRA) to manage GPUs efficiently.
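As a first GPU exercise, here is a sketch that builds a Pod manifest requesting a GPU through the `nvidia.com/gpu` extended resource, which is what the NVIDIA device plugin advertises on GPU nodes. It assumes the device plugin is already installed, and the image name is a hypothetical placeholder. DRA uses a different, newer API (ResourceClaims) and is not shown here.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Pod spec that requests GPUs via the device plugin's extended resource."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are set in limits; Kubernetes uses the limit as the request by default.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("gpu-smoke-test", "registry.example.com/ml/train:latest")
    print(json.dumps(manifest, indent=2))
```

kubectl accepts JSON as well as YAML, so the output can be piped straight to `kubectl apply -f -`. If no node has a free GPU, the Pod stays Pending, which is a useful first lesson in GPU scheduling.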

What’s Next?

As many of you may know, the MLOps space is growing very fast, with new open-source tools and constant improvements in ML models.

The good news is that if you spend some focused time, you can learn the core MLOps concepts without much difficulty.

Ready to get hands-on?

In the upcoming MLOps edition, I will share a detailed, step-by-step guide on building a machine learning model from scratch, so you can practice and understand core ML concepts.

✉️ AI Tool of the Week (Moltbot)

Moltbot is a new, open-source personal AI assistant that has become very popular in tech communities and among developers in early 2026.

It’s an AI assistant you run on your own computer or server instead of relying on a big cloud service.

Many developers and early tech users are excited because it bridges the gap between simple chatbots and real automation.

Warning: Because Moltbot can access files, messages, and apps on your device, security experts warn it can be risky if not set up carefully.