👋 Hi! I’m Bibin Wilson. In each edition, I share practical tips, guides, and the latest trends in DevOps and MLOps to make your day-to-day DevOps tasks more efficient. If someone forwarded this email to you, you can subscribe here to never miss out!

✉️ In Today’s Newsletter

In today's deep dive, I break down how Kubernetes works behind the scenes.

Core architecture of Kubernetes

Grasp the key underlying workflows of Kubernetes control plane components.

Also in this edition,

Remote/Hybrid DevOps Job Opportunities

Envoy Gateway controller

Dynatrace Intelligence for autonomous operations

AI & Platform engineering.

🤝 Sponsored by:

Cloudways: Cloudways Autonomous (by DigitalOcean) offers fully managed WordPress hosting on Kubernetes, with built-in scaling and high availability. Perfect for blogs and business websites.

🧱 How Kubernetes Actually Works

Understanding how Kubernetes works under the hood is essential for anyone working with it.

In most Kubernetes interviews, you will be asked about cluster architecture and how core components work together for scheduling, networking, and managing Pods. If you can explain these clearly, you will stand out from other candidates.

Since I can't cover everything in a single edition, I will be sending this as a three-part series. I am sure you will learn something new even if you have been working with Kubernetes for a while.

Let's get started.

Kubernetes Architecture (High Level)

The following Kubernetes architecture diagram shows all the components of the Kubernetes cluster and how external systems connect to the Kubernetes cluster.

The first thing you should understand about Kubernetes is that it is a distributed system, meaning it has multiple components spread across different servers over a network.

Control Plane

The control plane is responsible for container orchestration and maintaining the desired state of the cluster. It has the following components.

kube-apiserver

etcd

kube-scheduler

kube-controller-manager

cloud-controller-manager

Worker Node

Worker nodes are responsible for running containerized applications. Each worker node has the following components.

kubelet

kube-proxy

Container runtime

Now, let’s take a deep dive into each control plane component (Part 1)

1. kube-apiserver

The kube-apiserver is the central hub of the Kubernetes cluster and exposes the Kubernetes API. It is highly scalable and can handle a large number of concurrent requests.

End users and other cluster components talk to the cluster via the API server. Monitoring systems and third-party services can also talk to the API server to interact with the cluster.

So when you use kubectl to manage the cluster, behind the scenes you are actually communicating with the API server through HTTP REST APIs over TLS.
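To make that concrete, here is a minimal sketch of how listing pods maps to a REST path. The helper name `pod_list_url` and the server address are hypothetical; the `/api/v1/namespaces/<namespace>/pods` path follows the core API convention.

```python
def pod_list_url(server: str, namespace: str) -> str:
    """Build the URL that a 'list pods in a namespace' request maps to."""
    # Core (v1) resources live under /api/v1; pods are namespaced.
    return f"{server.rstrip('/')}/api/v1/namespaces/{namespace}/pods"


# Hypothetical API server address (documentation IP range), default HTTPS port 6443.
print(pod_list_url("https://203.0.113.10:6443", "default"))
```

A real client would send this request over TLS with credentials attached, which is what kubectl handles for you using your kubeconfig.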


The API server is responsible for the following.

API management: It exposes the cluster’s API endpoint and processes all API requests. The API is versioned and can support multiple versions at the same time.

Authentication (using client certificates, bearer tokens, or OpenID Connect; static HTTP Basic Authentication was removed in Kubernetes v1.19) and Authorization (RBAC, ABAC, Node, and Webhook modes)

Processing API requests and validating data for API objects like pods, services, etc. (validating and mutating admission controllers)

The API server coordinates all the processes between the control plane and worker node components.

The API server also contains an aggregation layer, which lets you extend the Kubernetes API with custom API resources.

The API server directly talks to etcd to store and retrieve cluster state. It’s the only main Kubernetes component that accesses etcd directly.

The API server also supports watching resources for changes. For example, clients can establish a watch on specific resources and receive real-time notifications when those resources are created, modified, or deleted.

Each component (kubelet, scheduler, controllers) independently watches the API server to figure out what it needs to do. (Hub & Spoke model)
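The watch and hub-and-spoke idea can be sketched with a toy in-memory server. This is only an illustration: `TinyAPIServer` and its methods are hypothetical names, not the Kubernetes client API. The event types (ADDED, MODIFIED, DELETED) mirror the ones Kubernetes watches report.

```python
from collections import defaultdict


class TinyAPIServer:
    """Toy stand-in for the API server: stores objects and notifies watchers."""

    def __init__(self):
        self._objects = {}                    # (kind, name) -> object
        self._watchers = defaultdict(list)    # kind -> list of callbacks

    def watch(self, kind, callback):
        # A component (the "spoke") registers interest in one resource kind.
        self._watchers[kind].append(callback)

    def apply(self, kind, name, obj):
        event = "MODIFIED" if (kind, name) in self._objects else "ADDED"
        self._objects[(kind, name)] = obj
        for callback in self._watchers[kind]:
            callback(event, obj)

    def delete(self, kind, name):
        obj = self._objects.pop((kind, name))
        for callback in self._watchers[kind]:
            callback("DELETED", obj)


events = []
server = TinyAPIServer()
server.watch("Pod", lambda event, obj: events.append((event, obj["name"])))

server.apply("Pod", "nginx", {"name": "nginx", "node": None})
server.apply("Pod", "nginx", {"name": "nginx", "node": "node-1"})
server.delete("Pod", "nginx")
print(events)
```

Notice that the watcher never talks to another component directly. Everything flows through the hub.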

2. etcd

Kubernetes is a distributed system, and it needs an efficient distributed database like etcd that supports its distributed nature. etcd acts as both a backend for service discovery and a database. You can call it the brain of the Kubernetes cluster.

etcd is an open-source, strongly consistent, distributed key-value store. So what does that mean?

Strongly consistent: Once a write is committed by a majority of the members, every member returns that latest value for subsequent reads, so clients never see stale or conflicting data.

Distributed: etcd is designed to run on multiple nodes as a cluster without sacrificing consistency.

Key Value Store: A nonrelational database that stores data as keys and values. It also exposes a key-value API. The datastore is built on top of bbolt, which is a fork of BoltDB.

etcd uses the Raft consensus algorithm for strong consistency and availability. It works in a leader-follower fashion for high availability and to withstand node failures.

For durability, etcd uses a write-ahead log (WAL). Every write is first appended to the WAL on disk before being applied to the in-memory store, ensuring durability and crash recovery.
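Here is a minimal sketch of the write-ahead idea, assuming a simple JSON-lines log file. It is not etcd's actual WAL format or code, and `TinyKV` is a hypothetical name. It only shows the ordering: log to disk first, then apply in memory, and replay the log on restart.

```python
import json
import os
import tempfile


class TinyKV:
    """Toy key-value store that logs every write before applying it."""

    def __init__(self, wal_path):
        self.wal_path = wal_path
        self.data = {}
        self._replay()

    def _replay(self):
        # Crash recovery: rebuild the in-memory state from the log.
        if not os.path.exists(self.wal_path):
            return
        with open(self.wal_path, encoding="utf-8") as wal:
            for line in wal:
                record = json.loads(line)
                self.data[record["key"]] = record["value"]

    def put(self, key, value):
        # 1. Append to the log and force it to disk.
        with open(self.wal_path, "a", encoding="utf-8") as wal:
            wal.write(json.dumps({"key": key, "value": value}) + "\n")
            wal.flush()
            os.fsync(wal.fileno())
        # 2. Only then apply the write to the in-memory store.
        self.data[key] = value


wal_file = os.path.join(tempfile.mkdtemp(), "wal.log")
store = TinyKV(wal_file)
store.put("/registry/pods/default/nginx", "Running")

# Simulate a restart: a fresh instance recovers its state from the log alone.
recovered = TinyKV(wal_file)
print(recovered.data)
```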

So, how does etcd work with Kubernetes?

To put it simply, when you use kubectl to get Kubernetes object details, the API server reads them from etcd. Also, when you deploy an object like a pod, an entry gets created in etcd.

In a nutshell, here is what you need to know about etcd.

etcd stores all configurations, states, and metadata of Kubernetes objects (pods, secrets, daemonsets, deployments, configmaps, statefulsets, etc).

etcd allows a client to subscribe to events using the Watch() API. The Kubernetes API server uses etcd's watch functionality to track changes in the state of an object.

etcd exposes its key-value API using gRPC. It also ships a gRPC gateway, a RESTful proxy that translates HTTP API calls into gRPC messages. This makes it an ideal database for Kubernetes.

etcd stores all objects under the /registry directory key in key-value format. For example, information on a pod named nginx in the default namespace can be found under /registry/pods/default/nginx.

Also, etcd is the only stateful component in the control plane.
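The key layout mentioned above can be sketched in a few lines. The helper names and the plain dict standing in for etcd are hypothetical; only the `/registry/pods/<namespace>/<name>` pattern comes from the example above.

```python
def pod_registry_key(namespace, name):
    """Key under which a pod would be stored, following the pattern above."""
    return f"/registry/pods/{namespace}/{name}"


def keys_with_prefix(store, prefix):
    """Mimic a prefix (range) read: return every key under a 'directory'."""
    return sorted(key for key in store if key.startswith(prefix))


# A plain dict standing in for the key-value store.
fake_etcd = {
    pod_registry_key("default", "nginx"): "{...pod spec...}",
    pod_registry_key("default", "redis"): "{...pod spec...}",
    pod_registry_key("kube-system", "coredns"): "{...pod spec...}",
}

print(pod_registry_key("default", "nginx"))
print(keys_with_prefix(fake_etcd, "/registry/pods/default/"))
```

Listing all pods in a namespace then becomes a read over one key prefix, which is why this flat layout works well for Kubernetes.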

The number of nodes in an etcd cluster directly affects its fault tolerance. Here's how it breaks down:

3 nodes: Can tolerate 1 node failure (quorum = 2)

5 nodes: Can tolerate 2 node failures (quorum = 3)

7 nodes: Can tolerate 3 node failures (quorum = 4)

And so on. The general formula for the number of node failures a cluster can tolerate is:

fault tolerance = (n - 1) / 2 (rounded down)

Where n is the total number of nodes.
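If you want to check the numbers yourself, here is a small sketch of the arithmetic. The function names are mine. Quorum is a majority of the members, and fault tolerance is whatever is left over.

```python
def quorum(n):
    """Smallest majority of an n-member cluster."""
    return n // 2 + 1


def fault_tolerance(n):
    """Number of members that can fail while a quorum still exists."""
    return (n - 1) // 2


for n in (3, 4, 5, 7):
    print(f"{n} nodes: quorum = {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

The 4-node row shows why odd cluster sizes are preferred: going from 3 to 4 members raises the quorum to 3 but still tolerates only 1 failure.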

3. kube-scheduler

The kube-scheduler is responsible for scheduling Kubernetes pods on worker nodes.

When you deploy a pod, you specify the pod requirements such as CPU, memory, affinity, tolerations (matched against node taints), priority, persistent volumes (PV), etc. The scheduler's primary task is to identify the create request and choose the best node for a pod that satisfies the requirements.

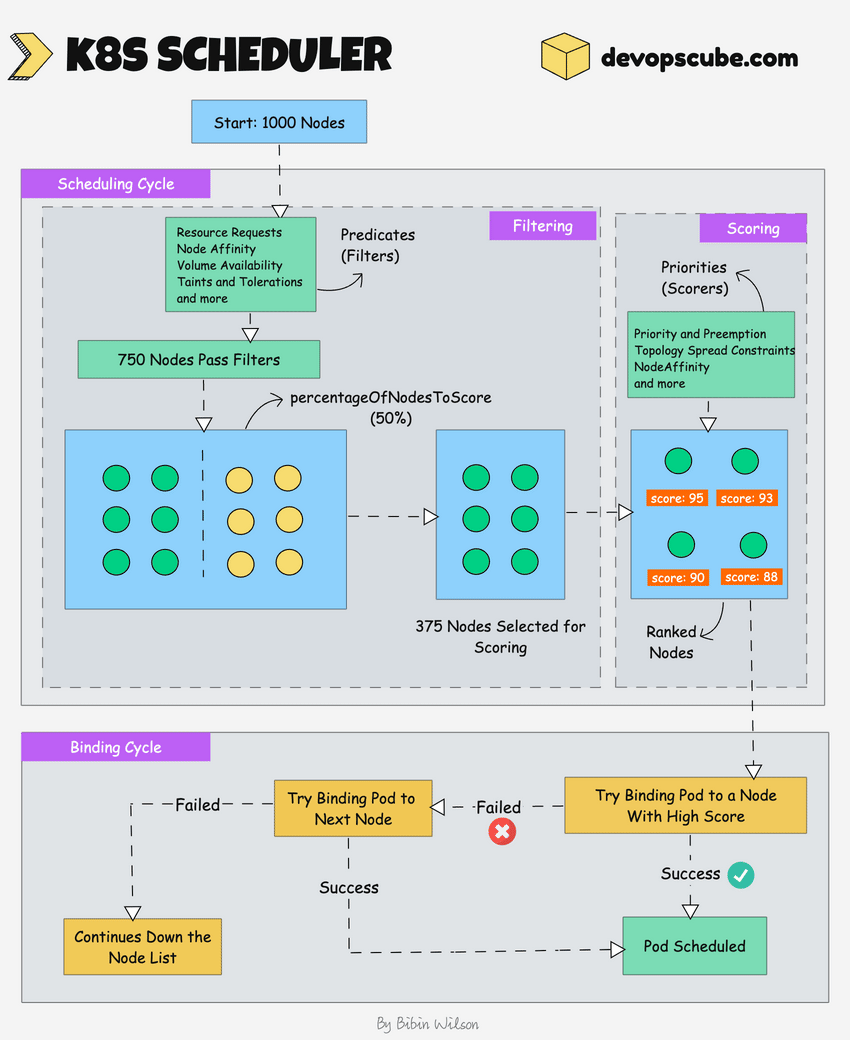

The following image shows a high-level overview of how the scheduler works.

In a Kubernetes cluster, there will be more than one worker node. So how does the scheduler select the node out of all worker nodes?

Here is how the scheduler works.

To choose the best node, the kube-scheduler uses filtering and scoring operations.

Filtering

In the filtering phase, the scheduler finds the feasible nodes, that is, the nodes where the pod can be scheduled. For example, if five worker nodes have enough resources to run the pod, it selects all five.

If there are no feasible nodes, the pod is unschedulable and is moved back to the scheduling queue. In a large cluster, with hundreds or thousands of worker nodes, the scheduler doesn't iterate over all the nodes.

There is a scheduler configuration parameter called percentageOfNodesToScore. If you don't set it, Kubernetes derives a default from the cluster size using a linear formula: about 50% for a 100-node cluster, dropping to 10% for a 5,000-node cluster. The scheduler iterates over the nodes in a round-robin fashion, and if the worker nodes are spread across multiple zones, it iterates over nodes in different zones.

For very large clusters, the automatic value never goes below 5%.
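Here is a rough sketch of how such a size-dependent default could be computed. The exact arithmetic is my assumption. It is chosen to reproduce the documented reference points (50% at 100 nodes, 10% at 5,000 nodes, a floor of 5%) and is not copied from the scheduler source.

```python
def default_percentage_of_nodes_to_score(num_nodes):
    """Assumed linear rule: start at 50% and drop 1 point per 125 nodes, floor 5%."""
    adaptive = 50 - num_nodes // 125
    return max(adaptive, 5)


for size in (100, 1000, 5000, 10000):
    print(f"{size} nodes -> {default_percentage_of_nodes_to_score(size)}%")
```

If you set percentageOfNodesToScore explicitly in the scheduler configuration, that value is used instead of the computed one.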

Scoring

In the scoring phase, the scheduler ranks the nodes by assigning a score to the filtered worker nodes. The scheduler computes the score by calling multiple scoring plugins.

Finally, the worker node with the highest rank will be selected for scheduling the pod. If all the nodes have the same rank, a node will be selected at random.

Once the node is selected, the scheduler creates a Binding object in the API server, which binds the pod to the selected node.
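The filter-then-score flow can be sketched as follows. This is a toy model: the node data, the field names, and the "most free resources wins" scoring rule are all assumptions for illustration, and the real scheduler combines many plugins.

```python
import random

nodes = [
    {"name": "node-1", "free_cpu": 0.5, "free_mem_gib": 8},
    {"name": "node-2", "free_cpu": 2.0, "free_mem_gib": 4},
    {"name": "node-3", "free_cpu": 4.0, "free_mem_gib": 8},
]
pod = {"cpu": 1.0, "mem_gib": 2}


def filter_nodes(pod, nodes):
    """Filtering: keep only nodes with enough free CPU and memory."""
    return [
        n for n in nodes
        if n["free_cpu"] >= pod["cpu"] and n["free_mem_gib"] >= pod["mem_gib"]
    ]


def score(pod, node):
    """Scoring (hypothetical rule): prefer the node with the most headroom left."""
    return (node["free_cpu"] - pod["cpu"]) + (node["free_mem_gib"] - pod["mem_gib"])


def schedule(pod, nodes):
    feasible = filter_nodes(pod, nodes)
    if not feasible:
        return None  # unschedulable: the pod would go back to the queue
    best = max(score(pod, n) for n in feasible)
    top = [n for n in feasible if score(pod, n) == best]
    return random.choice(top)["name"]  # ties are broken at random


print(schedule(pod, nodes))
```

Here node-1 is filtered out for lack of CPU, and node-3 outscores node-2, so the pod lands on node-3.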

Here is what you need to know about a scheduler.

It is a controller that listens to pod creation events in the API server.

The scheduler has two phases: the scheduling cycle and the binding cycle. Together they are called the scheduling context. The scheduling cycle selects a worker node and the binding cycle applies that change to the cluster.

The scheduler always places the high-priority pods ahead of the low-priority pods for scheduling. Also, in some cases, after the pod starts running on the selected node, the pod might get evicted or moved to other nodes. If you want to understand more, read the Kubernetes pod priority guide.

You can create custom schedulers and run multiple schedulers in a cluster along with the native scheduler. When you deploy a pod you can specify the custom scheduler in the pod manifest. So the scheduling decisions will be taken based on the custom scheduler logic.

The scheduler has a pluggable scheduling framework. Meaning, that you can add your custom plugin to the scheduling workflow.
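As a quick illustration of the custom scheduler point, the sketch below builds a pod manifest that sets `spec.schedulerName`. The scheduler name `my-custom-scheduler` and the pod details are hypothetical. I build it as a Python dict and print JSON, which kubectl accepts just like YAML.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "nginx"},
    "spec": {
        # Hypothetical name; it must match a scheduler running in the cluster.
        # If this field is omitted, the default scheduler handles the pod.
        "schedulerName": "my-custom-scheduler",
        "containers": [{"name": "nginx", "image": "nginx"}],
    },
}

print(json.dumps(pod, indent=2))
```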

💡 For specialized hardware (like GPUs, FPGAs, smart NICs) the scheduler can make use of Dynamic Resource Allocation (DRA).

It is a feature in Kubernetes (stable since v1.34) that gives the scheduler real information about hardware devices in the cluster.

So if DRA is enabled, the scheduler can do hardware-aware scheduling for specialized devices that are particularly useful for AI/ML workloads.

4. Kube Controller Manager

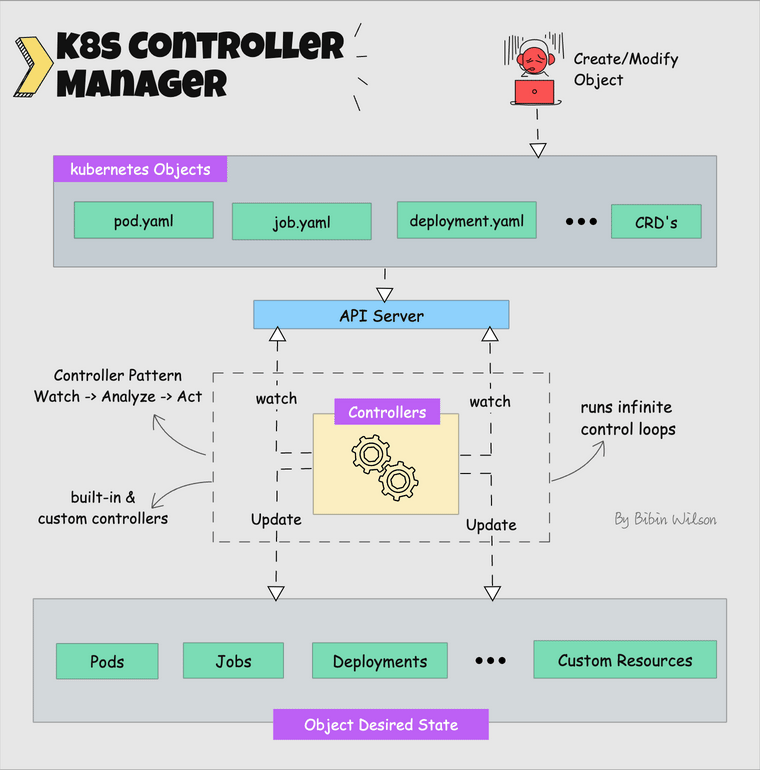

First, let's understand what a controller is.

Controllers are programs that run infinite control loops. A controller runs continuously and watches the actual and desired state of objects. If the two differ, it acts to bring the Kubernetes resource/object back to the desired state.

Let's say you want to create a deployment. You specify the desired state in the manifest YAML file (declarative approach), for example, 2 replicas, one volume mount, a configmap, etc.

The built-in deployment controller ensures that the deployment is in the desired state all the time. If a user updates the deployment to 5 replicas, the deployment controller notices the change and brings the actual state to 5 replicas.

The kube-controller-manager is a component that manages all the Kubernetes controllers. Kubernetes resources/objects like pods, namespaces, jobs, and replicasets are managed by their respective controllers.
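A control loop boils down to "compare, then act". Here is a minimal sketch of one reconcile step for replica counts. The function and pod names are hypothetical, and a real controller would create and delete pods through the API server instead of editing a list.

```python
import itertools

_pod_ids = itertools.count(1)  # simple source of unique pod names


def reconcile(desired, pods):
    """One pass of the loop: make the number of pods match the desired count."""
    pods = list(pods)
    while len(pods) < desired:          # too few: create pods
        pods.append(f"web-{next(_pod_ids)}")
    while len(pods) > desired:          # too many: remove pods
        pods.pop()
    return pods


pods = reconcile(2, [])        # initial deployment with 2 replicas
pods = reconcile(5, pods)      # user scales the deployment to 5
pods.remove("web-3")           # a pod crashes (actual state drifts)
pods = reconcile(5, pods)      # the next pass replaces it
print(pods)
```

A real controller repeats this pass every time it sees a relevant change, which is what keeps the actual state converging on the desired one.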

Here is what you should know about the Kube controller manager.

It manages all the controllers and the controllers try to keep the cluster in the desired state.

You can extend Kubernetes with custom controllers associated with a custom resource definition. (Operator Pattern)

5. Cloud Controller Manager (CCM)

When Kubernetes is deployed in cloud environments, the cloud controller manager acts as a bridge between Cloud Platform APIs and the Kubernetes cluster.

This way, the core Kubernetes components can work independently, and cloud providers can integrate with Kubernetes using plugins. (For example, an interface between a Kubernetes cluster and the AWS cloud API)

Cloud controller integration allows a Kubernetes cluster to provision cloud resources like instances (for nodes), load balancers (for services), etc.

Cloud Controller Manager contains a set of cloud platform-specific controllers that ensure the desired state of cloud-specific components (nodes, load balancers, etc).

The following are the three main controllers that are part of the cloud controller manager.

Node controller: This controller updates node-related information by talking to the cloud provider API. For example, node labeling & annotation, getting hostname, CPU & memory availability, node health, etc.

Route controller: It is responsible for configuring underlying networking routes on a cloud platform so that pods on different nodes can talk to each other.

Service controller: It takes care of deploying load balancers, configuring health checks, and updating the Service status with the external IP.

The following is a classic example of the cloud controller manager at work.

Deploying a Kubernetes Service of type LoadBalancer. Here, Kubernetes provisions a cloud-specific load balancer and integrates it with the Kubernetes Service.

Overall, the Cloud Controller Manager manages the lifecycle of cloud-specific resources used by Kubernetes.
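To picture the LoadBalancer example, here is a small sketch of a Service manifest and of the status the service controller fills in afterwards. The Service name, labels, ports, and IP address are hypothetical, and the `external_ip` helper is mine. The `status.loadBalancer.ingress` path is where the external address is reported.

```python
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "type": "LoadBalancer",  # asks the cloud provider for a load balancer
        "selector": {"app": "web"},
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}


def external_ip(svc):
    """Return the external IP once it has been published, else None."""
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    return ingress[0].get("ip") if ingress else None


print(external_ip(service))  # nothing provisioned yet

# Simulate the update written back after the cloud load balancer is ready.
service["status"] = {"loadBalancer": {"ingress": [{"ip": "203.0.113.20"}]}}
print(external_ip(service))
```

Some providers publish a hostname instead of an IP in the ingress entry, in which case this helper would return None.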

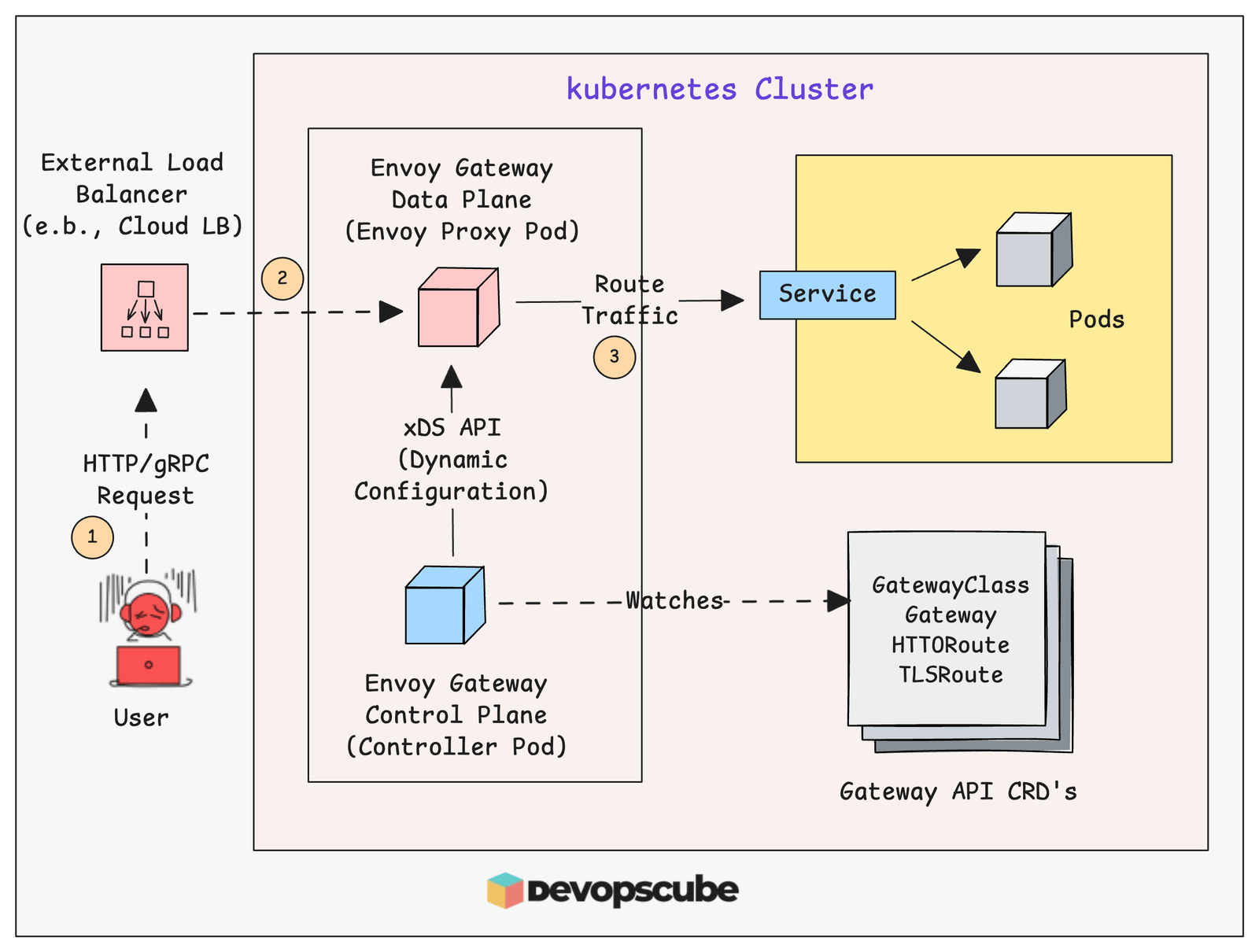

🧱 Envoy Gateway API Controller

Envoy Gateway is an open-source project that is designed to simplify the use of Envoy Proxy as a Gateway API controller.

Here is an interesting thing about Envoy. Compared to other Gateway API controllers, Envoy Gateway stands out because it uses Envoy Proxy, which is built specifically for cloud-native environments.

Envoy uses a fully dynamic control plane called xDS (Discovery Service). This means configuration changes, such as adding or updating a route config, are applied instantly in memory. There is no need to restart or reload the proxy process.

💡 Envoy's xDS protocol has become the de facto standard for configuring cloud-native proxies and service meshes like Istio.

In simple terms, traffic rules can change in real time without causing downtime. This makes Envoy Gateway a strong fit for modern Kubernetes and microservices setups where changes happen often and need to be safe and fast.

🧰 Remote Job Opportunities

Nice - Specialist DevOps Engineer

Mobile Programming - DevOps Engineer (Azure)

Inflection.io - DevOps Engineer

Upwork - Senior Cloud & DevOps Architect

Shifttotech - AWS Cloud Architect/DevOps Engineer

Hyland - DevOps Engineer 2

📦 Keep Yourself Updated

Beyond automation With Agentic AI: Dynatrace has launched a new agentic AI system that goes beyond automation to detect and fix IT problems on its own, helping teams resolve issues faster and reduce manual work.

AI and platform engineering: This article reviews a 2025 platform engineering webinar that highlights how AI shaped the field, the move from theory to practice, and key capabilities platform teams must build as they head into 2026.