👋 Hi! I’m Bibin Wilson. In each edition, I share practical tips, guides, and the latest trends in DevOps and MLOps to make your day-to-day DevOps tasks more efficient.

Kubernetes has a powerful feature called the Aggregation Layer.

It lets you add custom APIs to your cluster, so you can introduce your own resource types and make Kubernetes do more than it can by default.

Why would you need a custom API?

Kubernetes gives you many built-in resources like Pods, Deployments, and Services.

But sometimes you need something extra. For example, you might want to:

Serve metrics in a custom format

Expose your app’s own APIs to Kubernetes

With custom APIs, you can extend Kubernetes without changing its original code. That is where the Aggregation Layer comes in.

Popular tools like Metrics Server and Prometheus Adapter use this method to plug into Kubernetes.

We will look at the Metrics Server example in an upcoming section.

Extension API Server

To enable custom APIs, you need to build and deploy an extension API server.

Extension API Server = adds new APIs

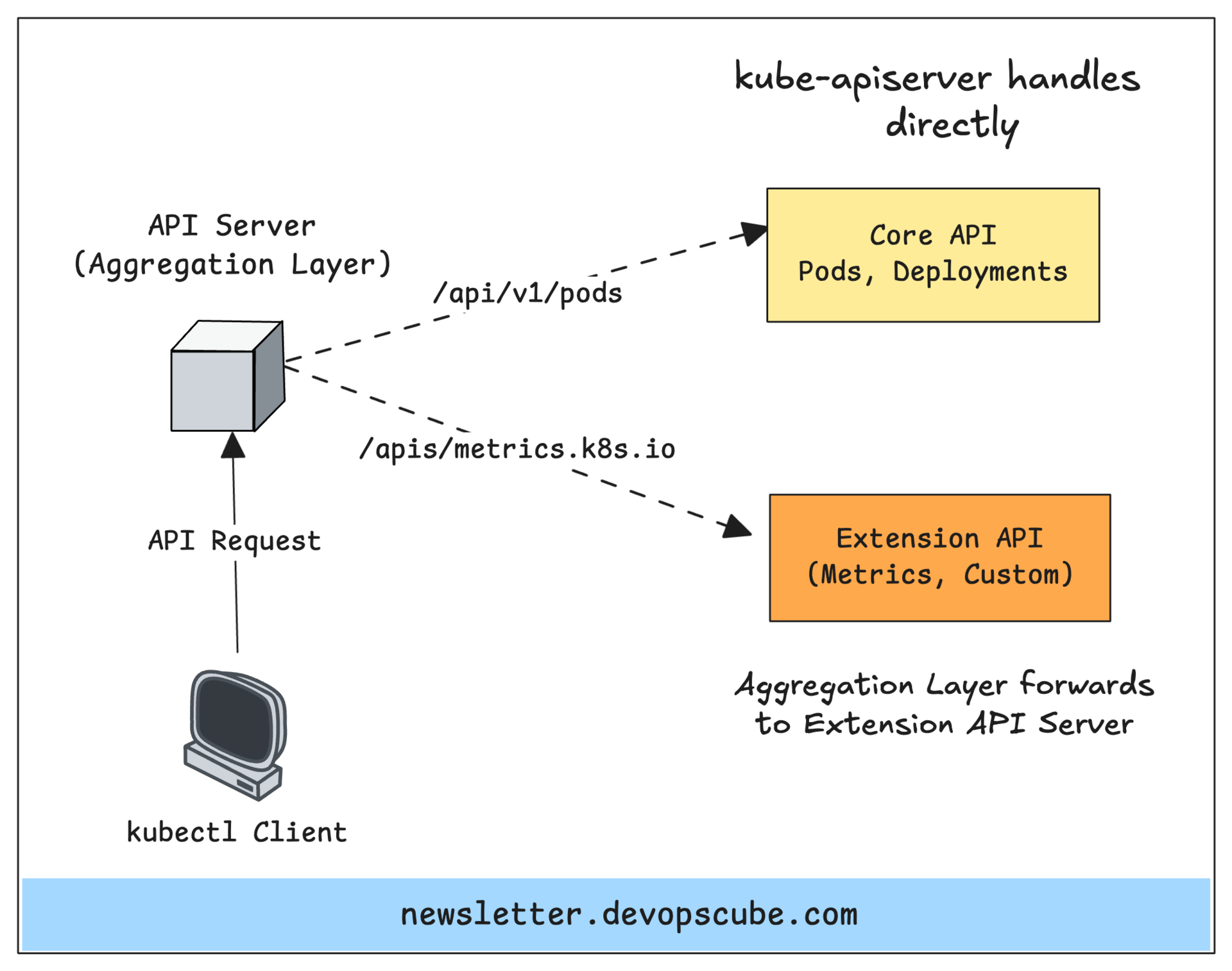

Logically, the aggregation layer sits in front of the main Kubernetes API server, routing requests to either the core API server or to extension API servers you have set up.

Physically, it is part of the kube-apiserver binary, so there is no separate aggregator process to run.

Here is how it works.

The Kubernetes API server receives a request.

If the request is for a core resource like Pods/Deployments, it handles it itself.

If the request is for an API group and version registered to an extension API server, it forwards the request to that Extension API Server.

The Extension API Server responds, and Kubernetes sends the response back to the user.

You will understand this even better when we walk through a real-world example in the next section.

Real World Example (Metrics Server)

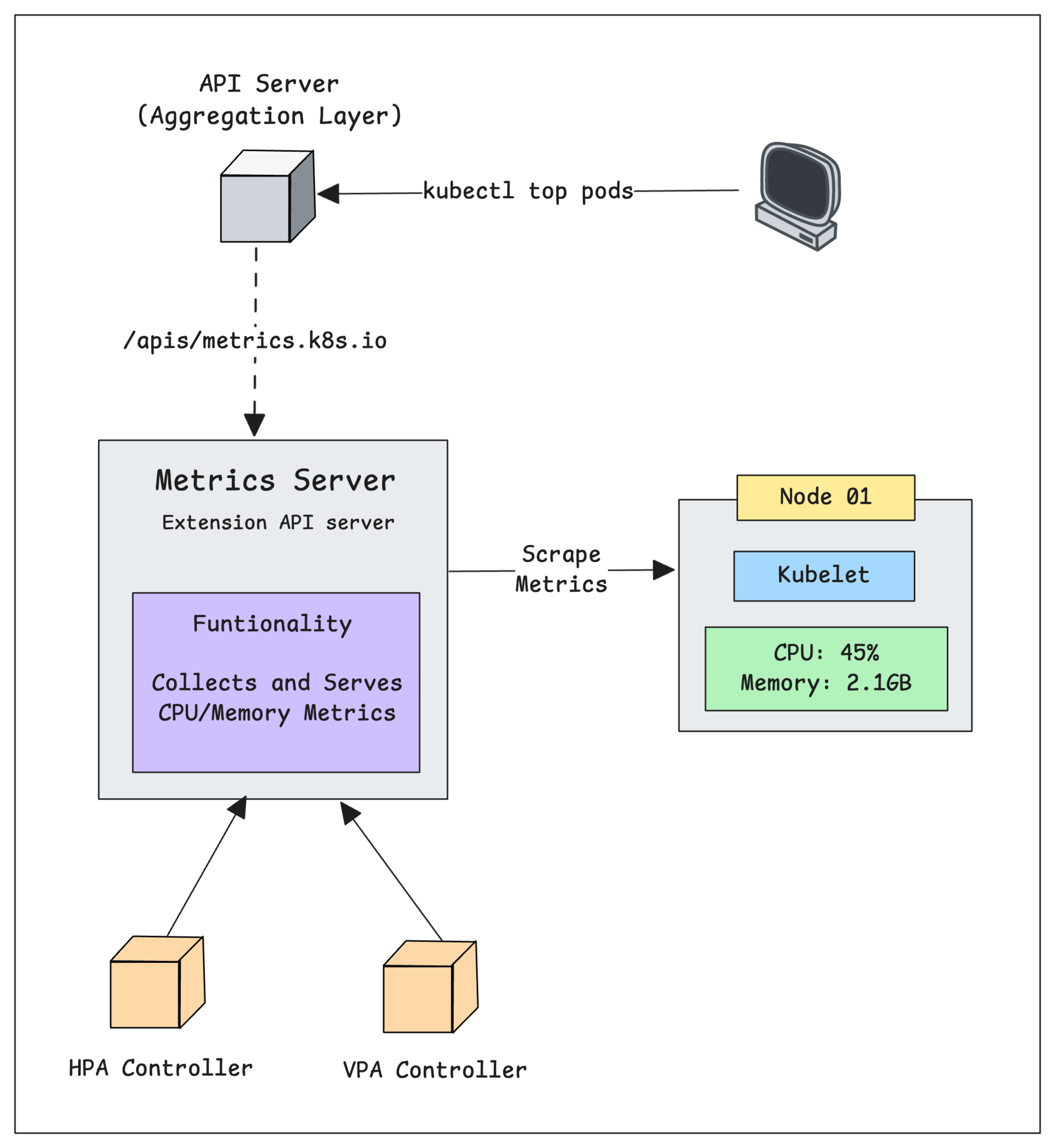

A classic example of using the Aggregation Layer in Kubernetes is the implementation of the Metrics Server.

Kubernetes itself doesn't store live CPU/memory usage.

The Metrics Server is usually deployed as an add-on component in Kubernetes clusters. It provides the Metrics API (metrics.k8s.io), which the Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA) use to scale pods automatically.

Metrics Server implements the Metrics API as an add-on API server, meaning it extends the Kubernetes API using the Aggregation Layer we discussed earlier.

Here is how it works.

The Metrics Server collects resource metrics from Kubelets on each node in your cluster.

It then serves these metrics via the Metrics API, which is exposed through Kubernetes API aggregation.

This allows the Kubernetes API server to serve these metrics alongside other core APIs.

You can view the API services in a cluster that has the Metrics Server deployed by running the following command:

$ kubectl get apiservices

v1.storage.k8s.io          Local

v1beta1.metallb.io         Local

v1beta1.metrics.k8s.io     kube-system/metrics-server

In the output:

Local means the API is served directly by the main Kubernetes API server.

kube-system/metrics-server means the API is served by the metrics-server Service in the kube-system namespace.
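To see where that SERVICE value comes from, here is a minimal Python sketch that reads APIService objects in the shape returned by kubectl get apiservices -o json. An APIService with no spec.service is handled locally by the main API server. Otherwise, the aggregator proxies to the named Service. The sample data is hand-written and trimmed for illustration, and the helper names service_column and summarize are hypothetical.

```python
import json

# Hand-written sample in the shape of `kubectl get apiservices -o json`,
# trimmed to the fields this sketch uses (real objects carry many more).
SAMPLE = """
{
  "items": [
    {"metadata": {"name": "v1.storage.k8s.io"},
     "spec": {"group": "storage.k8s.io", "version": "v1"}},
    {"metadata": {"name": "v1beta1.metrics.k8s.io"},
     "spec": {"group": "metrics.k8s.io", "version": "v1beta1",
              "service": {"namespace": "kube-system", "name": "metrics-server"}}}
  ]
}
"""


def service_column(apiservice: dict) -> str:
    """Return the SERVICE value shown for one APIService object."""
    service = apiservice.get("spec", {}).get("service")
    if not service:
        # No backing Service: the main kube-apiserver serves this group/version.
        return "Local"
    return f"{service['namespace']}/{service['name']}"


def summarize(doc: str) -> dict:
    """Map each APIService name to 'Local' or 'namespace/service'."""
    items = json.loads(doc)["items"]
    return {item["metadata"]["name"]: service_column(item) for item in items}


if __name__ == "__main__":
    for name, backend in summarize(SAMPLE).items():
        print(f"{name:<28}{backend}")
```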

This means that even though the Metrics Server is a separate service, its API can be accessed as if it were a native part of the Kubernetes API.

Another example is Prometheus Adapter. It exposes arbitrary Prometheus metrics through the Kubernetes custom metrics API.

It is used for autoscaling on custom application metrics and for more complex monitoring scenarios, whereas Metrics Server supports only basic resource-based (CPU/memory) autoscaling. This API is served under the path /apis/custom.metrics.k8s.io/v1beta1.
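Custom metrics requests name a resource first and then the metric. The Python sketch below builds such paths. It assumes the v1beta1 layout `.../namespaces/{namespace}/{resource}/{name}/{metric}` for namespaced objects, with `*` selecting every object of that resource. The helper custom_metrics_path and the metric name http_requests are hypothetical, and the metrics actually available depend on how Prometheus Adapter is configured.

```python
from typing import Optional

BASE = "/apis/custom.metrics.k8s.io/v1beta1"


def custom_metrics_path(resource: str, name: str, metric: str,
                        namespace: Optional[str] = None) -> str:
    """Build a custom metrics API path (assumed v1beta1 layout).

    Pass name="*" to request the metric for every object of the resource.
    """
    if namespace:
        return f"{BASE}/namespaces/{namespace}/{resource}/{name}/{metric}"
    # Cluster-scoped objects such as nodes have no namespaces/ segment.
    return f"{BASE}/{resource}/{name}/{metric}"


if __name__ == "__main__":
    # Hypothetical metric for all pods in the "default" namespace.
    print(custom_metrics_path("pods", "*", "http_requests", namespace="default"))
```

You could pass the resulting path to kubectl get --raw. Quote it in the shell so that `*` is not expanded.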

Overall, here is how the request flow works:

When you run kubectl get pods, the request goes to /api/v1/pods → routed to the Core API Server.

When you run kubectl top pods, the request goes to /apis/metrics.k8s.io/v1beta1/ → routed to Metrics Server.

Custom metric queries go to /apis/custom.metrics.k8s.io/ → routed to Prometheus Adapter.
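That routing decision can be modeled as a lookup keyed by API group and version. This is a simplified Python model and not kube-apiserver's actual code. The REGISTRY table stands in for registered APIService objects, route and group_version are hypothetical helpers, and the monitoring/prometheus-adapter entry is an assumed namespace and name. The real aggregator also handles authentication, discovery merging, and availability checks.

```python
# Simplified model of aggregation-layer routing (not the real implementation).
# Keys are (group, version); values name the backend that serves them.
REGISTRY = {
    ("", "v1"): "Core API Server",  # legacy core group, served under /api/v1
    ("apps", "v1"): "Core API Server",
    ("metrics.k8s.io", "v1beta1"): "kube-system/metrics-server",
    # Hypothetical namespace/name for a Prometheus Adapter install.
    ("custom.metrics.k8s.io", "v1beta1"): "monitoring/prometheus-adapter",
}


def group_version(path: str) -> tuple:
    """Extract (group, version) from an API request path."""
    parts = [p for p in path.split("/") if p]
    if len(parts) >= 2 and parts[0] == "api":
        return ("", parts[1])  # core group: /api/{version}/...
    if len(parts) >= 3 and parts[0] == "apis":
        return (parts[1], parts[2])  # named group: /apis/{group}/{version}/...
    raise ValueError(f"not a resource API path: {path}")


def route(path: str) -> str:
    """Return the backend that would handle this request in the model."""
    return REGISTRY.get(group_version(path), "404: no API registered")


if __name__ == "__main__":
    for p in ["/api/v1/pods", "/apis/metrics.k8s.io/v1beta1/nodes"]:
        print(p, "->", route(p))
```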

In short,

Core API Server: Comes by default with Kubernetes.

Extension API Server(s): Extra API servers you deploy. These are registered with the main API server through APIService objects.
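Registration happens through an APIService object in the apiregistration.k8s.io/v1 API. The Python sketch below builds such a manifest as a dict. kubectl accepts JSON, so you could pipe the output to kubectl apply -f -. The helper name apiservice_manifest and its default priorities of 100 are illustrative assumptions. Skipping TLS verification is only for a quick demo; a real setup should set caBundle instead.

```python
import json


def apiservice_manifest(group: str, version: str, svc_namespace: str,
                        svc_name: str, group_priority_minimum: int = 100,
                        version_priority: int = 100) -> dict:
    """Build an APIService that points group/version at an in-cluster Service."""
    return {
        "apiVersion": "apiregistration.k8s.io/v1",
        "kind": "APIService",
        # APIService names take the form "<version>.<group>".
        "metadata": {"name": f"{version}.{group}"},
        "spec": {
            "group": group,
            "version": version,
            "service": {"namespace": svc_namespace, "name": svc_name},
            "groupPriorityMinimum": group_priority_minimum,
            "versionPriority": version_priority,
            # Demo only: prefer caBundle over skipping TLS verification.
            "insecureSkipTLSVerify": True,
        },
    }


if __name__ == "__main__":
    m = apiservice_manifest("metrics.k8s.io", "v1beta1", "kube-system", "metrics-server")
    print(json.dumps(m, indent=2))
```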

From a user's point of view, it still looks like one API. You run:

$ kubectl get pods

$ kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes"

The second request is actually handled by the Metrics Server's API server; the main API server just forwards it there.
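Because the aggregated API returns ordinary Kubernetes-style JSON, any client can consume it. Below is a minimal Python sketch that turns a NodeMetricsList, the kind returned by /apis/metrics.k8s.io/v1beta1/nodes, into per-node CPU millicores and memory MiB. The sample payload is hand-written. The quantity parsing is a simplified assumption: it handles only the suffixes shown (n, u, m for CPU and Ki, Mi, Gi for memory), not the full Kubernetes quantity grammar.

```python
import json

# Hand-written sample shaped like
# `kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes"`.
SAMPLE = """
{"kind": "NodeMetricsList", "apiVersion": "metrics.k8s.io/v1beta1",
 "items": [
   {"metadata": {"name": "node-1"}, "usage": {"cpu": "250000000n", "memory": "2048Mi"}},
   {"metadata": {"name": "node-2"}, "usage": {"cpu": "1500m", "memory": "1Gi"}}
 ]}
"""

CPU_DIVISOR = {"n": 1e6, "u": 1e3, "m": 1.0}  # suffix -> units per millicore
MEM_TO_MIB = {"Ki": 1 / 1024, "Mi": 1.0, "Gi": 1024.0}  # suffix -> MiB per unit


def cpu_millicores(q: str) -> float:
    """Convert a CPU quantity such as '250000000n' or '2' to millicores."""
    if q[-1] in CPU_DIVISOR:
        return float(q[:-1]) / CPU_DIVISOR[q[-1]]
    return float(q) * 1000  # a plain number means whole cores


def memory_mib(q: str) -> float:
    """Convert a memory quantity with a Ki/Mi/Gi suffix (or plain bytes) to MiB."""
    for suffix, scale in MEM_TO_MIB.items():
        if q.endswith(suffix):
            return float(q[: -len(suffix)]) * scale
    return float(q) / (1024 * 1024)  # a plain number means bytes


def node_usage(doc: str) -> dict:
    """Return {node: (cpu_millicores, memory_mib)} from a NodeMetricsList."""
    return {
        item["metadata"]["name"]: (
            cpu_millicores(item["usage"]["cpu"]),
            memory_mib(item["usage"]["memory"]),
        )
        for item in json.loads(doc)["items"]
    }


if __name__ == "__main__":
    for node, (cpu, mem) in node_usage(SAMPLE).items():
        print(f"{node}: {cpu:.0f}m CPU, {mem:.0f}Mi memory")
```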

Building Extension API Server

To build an extension API server, you can use the apiserver-builder repository.

apiserver-builder is a tool designed to simplify the process of creating Kubernetes extension API servers.

It generates much of the boilerplate code needed to create a Kubernetes API server. For example:

It generates API definitions

It creates controller scaffolding

It handles common tasks like setting up CRUD operations

Here is an example API server implementation.
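API discovery is one piece of that boilerplate. Clients such as kubectl learn which resources a group/version offers by fetching /apis/{group}/{version}, and the aggregator forwards that request to the extension server, which answers with an APIResourceList. The Python sketch below only illustrates the shape of that response. It uses a hypothetical widgets resource in a hypothetical example.com group with an assumed full set of verbs. A real extension API server also needs TLS, delegated authentication and authorization, and storage, which is what tooling like apiserver-builder scaffolds for you.

```python
import json


def api_resource_list(group: str, version: str, resources: list) -> dict:
    """Build an APIResourceList discovery document for one group/version.

    Each entry in `resources` is a (plural, kind, namespaced) tuple.
    """
    return {
        "kind": "APIResourceList",
        "apiVersion": "v1",
        "groupVersion": f"{group}/{version}",
        "resources": [
            {
                "name": plural,
                "singularName": kind.lower(),
                "namespaced": namespaced,
                "kind": kind,
                # Assumed verb set for illustration; list only what you implement.
                "verbs": ["get", "list", "watch", "create", "update", "patch", "delete"],
            }
            for plural, kind, namespaced in resources
        ],
    }


if __name__ == "__main__":
    doc = api_resource_list("example.com", "v1alpha1", [("widgets", "Widget", True)])
    print(json.dumps(doc, indent=2))
```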

When to use the Aggregation Layer

In practice, it's common to start with CustomResourceDefinitions (CRDs) and move to the Aggregation Layer only if you run into their limitations.

For the majority of scenarios where you need to extend Kubernetes, such as defining application-specific resources or operational patterns, CRDs provide all the necessary functionality.

Many projects, including major Kubernetes extensions like Istio and Knative, use a combination of both approaches.