👋 Hi! I’m Bibin Wilson. In each edition, I share practical tips, guides, and the latest trends in DevOps and MLOps to make your day-to-day DevOps tasks more efficient. If someone forwarded this email to you, you can subscribe here to never miss out!

✉️ In Today’s Newsletter

In today's deep dive, I break down everything about Kubernetes Node Feature Discovery.

What is NFD in Kubernetes

Key Use Cases

NFD Architecture

Hands on NFD

Label Filtering to avoid label explosion.

Also, learn how to analyze what’s bloating your Docker image.

🧰 Remote Job Opportunities

ST Engineering iDirect - DevOps Engineer

Akamai Technologies - Senior Site Reliability Engineer

Gainwell Technologies - DevOps Engineer (Terraform)

Ollion - Lead DevOps Engineer

Turing - MLOps Engineer

Scopic - MLOps Consultant

Canonical - Site Reliability Engineer - GitOps

Presidio - Cloud Migration Architect

🧑‍💻 For K8s Certification Aspirants

The Linux Foundation is running a 2-day flash sale on all its certification registrations!

Use code SPOOKY40CT at kube.promo/devops to get up to 47% off on CK{A,D,S} certifications.

You can find all bundle offers and discounts in this GitHub repository.

📚 k8s Node Feature Discovery

By default, every Kubernetes node comes with a few basic built-in labels that are automatically added by the kubelet.

For example,

kubernetes.io/hostname=<node-name>

kubernetes.io/os=linux

kubernetes.io/arch=amd64

If your cluster runs on a cloud provider like AWS EKS, you will also see extra labels that describe the cloud environment, such as:

topology.kubernetes.io/region=ap-south-1

topology.kubernetes.io/zone=ap-south-1a

node.kubernetes.io/instance-type=t3.medium

These labels are useful for scheduling, automation, and workload placement.

For instance, you can schedule a Pod to run only in a certain region or on a specific instance type.
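As a rough sketch of the instance-type case, the snippet below writes a Pod manifest that uses a nodeSelector on the node.kubernetes.io/instance-type label shown above. The Pod name, file name, and nginx image are placeholders chosen for illustration, and it assumes your cluster actually has t3.medium nodes.

```shell
# Write a Pod manifest that can only be scheduled on t3.medium nodes.
# The Pod name, file name, and image are illustrative placeholders.
cat > instance-type-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: instance-type-demo
spec:
  nodeSelector:
    node.kubernetes.io/instance-type: t3.medium
  containers:
    - name: app
      image: nginx
EOF

# Apply it against a cluster that has matching nodes:
# kubectl apply -f instance-type-pod.yaml
```

If no node carries the label, the Pod simply stays in Pending, which is a quick way to confirm the selector is being honored.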

However, notice that these default labels don’t include any details about the hardware, kernel, or device features of the node.

That's where Node Feature Discovery (NFD) comes in.

What is Node Feature Discovery (NFD)?

Node Feature Discovery is a Kubernetes add-on that automatically detects and labels node-level features such as CPU flags, memory, kernel version, network adapters, and GPU capabilities.

If you have used Ansible facts before, NFD works in a similar way.

Just like Ansible gathers system information and provides it as metadata, NFD collects system details from Kubernetes nodes and exposes them as node labels.

🧱 Use Cases

Let's look at three key use cases for NFD to understand it better.

1. Detecting Nodes with Swap Enabled

One simple and practical use case of NFD is to automatically detect nodes with swap enabled.

In our Kubernetes Swap newsletter, I explained how NFD can label such nodes.

Once installed, NFD adds labels that indicate whether swap is turned on or off for each node.

This makes it easy to schedule or monitor Pods based on swap status.

2. Scheduling AI and GPU Workloads

Another common use case is AI and GPU workload scheduling.

NFD works alongside the Kubernetes Device Plugin framework (for example, the NVIDIA device plugin) to detect GPU presence and capabilities and add GPU-related labels to nodes.

This makes it possible to schedule machine learning or deep learning workloads only on GPU-enabled nodes.
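Here is a minimal sketch of what such a GPU-only Pod could look like. It assumes NFD's default PCI label format (class and vendor), where 10de is NVIDIA's PCI vendor ID and 0302 is the "3D controller" class that many data center GPUs report. Your nodes may report a different class (for example 0300), so check the actual labels first. The nvidia.com/gpu resource assumes the NVIDIA device plugin is installed, and the image name is hypothetical.

```shell
# Write a Pod manifest that targets nodes NFD has labeled as having an NVIDIA PCI device.
cat > gpu-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gpu-demo
spec:
  nodeSelector:
    # Assumed label: PCI class 0302 (3D controller), vendor 10de (NVIDIA).
    feature.node.kubernetes.io/pci-0302_10de.present: "true"
  containers:
    - name: trainer
      image: registry.example.com/ml/trainer:latest  # hypothetical image
      resources:
        limits:
          nvidia.com/gpu: 1  # exposed by the NVIDIA device plugin, not by NFD
EOF

# kubectl apply -f gpu-pod.yaml
```

The label only steers the Pod to the right nodes. The GPU itself is still allocated through the device plugin's extended resource.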

3. NUMA-Aware Scheduling and CPU Pinning

For high-performance or latency-sensitive applications, NFD provides the detailed hardware topology data needed for the following:

NUMA-aware scheduling, where Pods are placed close to the memory and devices they use (typically used for AI/ML inference, databases, etc.).

CPU pinning, where specific CPU cores are dedicated to certain workloads (used in real-time applications such as VoIP and telecom workloads).

This helps achieve better performance and resource efficiency in compute-heavy environments.

In short, NFD acts as the hardware awareness layer for Kubernetes.

You can use NFD for any scenario where your application requires special hardware features such as certain GPUs, CPUs, kernel modules, or network devices.

Node Feature Discovery Architecture

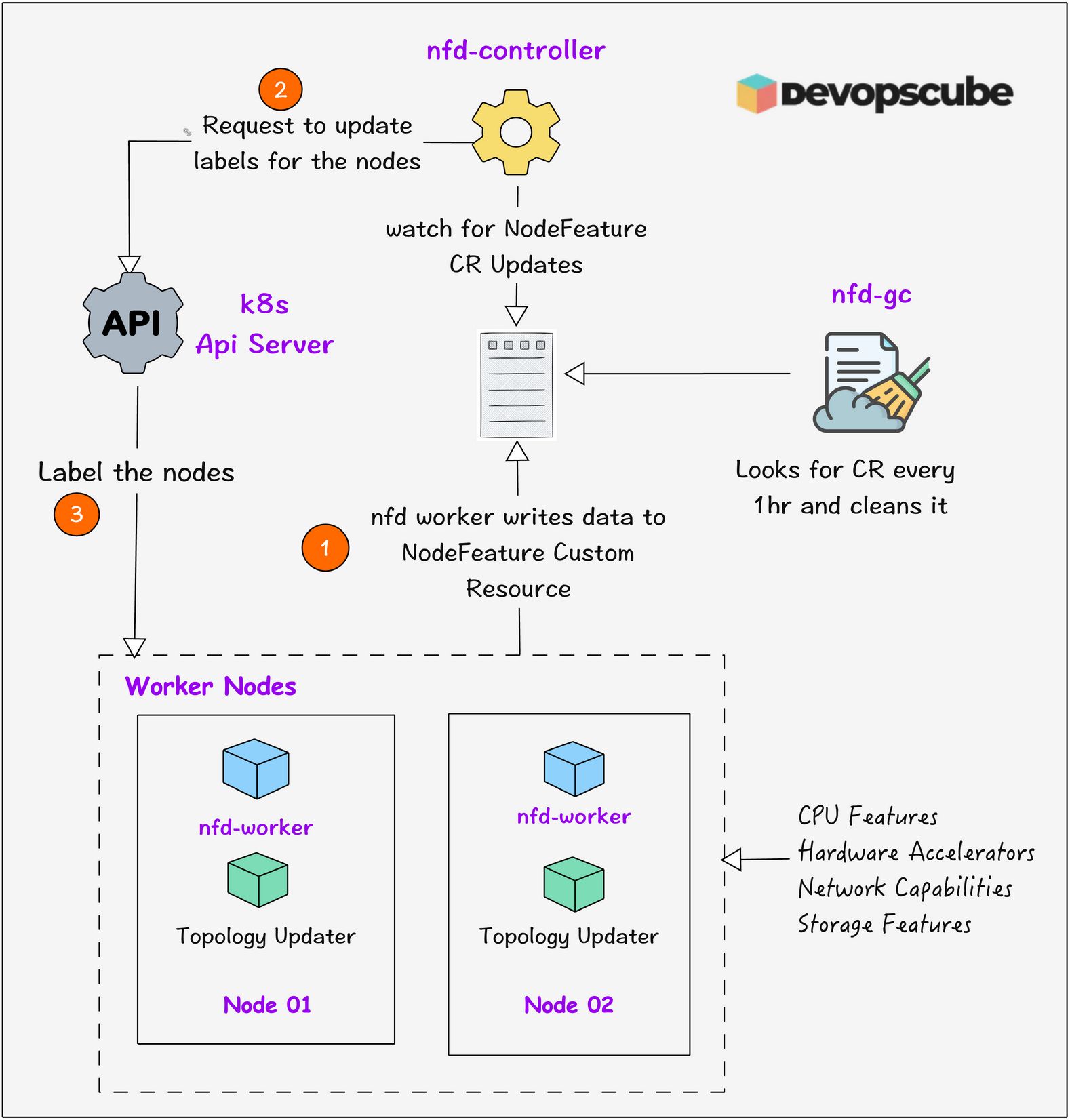

The following image shows the high level architecture of NFD.

Here is how it all works together.

nfd-worker runs as a DaemonSet on each node. It scans multiple sources (CPU, memory, PCI, etc.) and writes raw feature data into NodeFeature custom resources.

At regular intervals (default ~60s), workers update NodeFeature CRs to reflect changes.

nfd-master runs as a Deployment (usually a single replica). It watches these NodeFeature CRs, applies any filtering or rules, and updates the actual Node objects (labels, taints, extended resources) via the Kubernetes API.

nfd-topology-updater (if enabled) also runs as a DaemonSet. It collects NUMA, CPU, memory, and device topology from nodes and publishes NodeResourceTopology objects so that a topology-aware scheduler plugin can make NUMA-aware scheduling decisions.

nfd-gc (Garbage Collector) ensures that for any node that has left the cluster, its corresponding NodeFeature or NodeResourceTopology objects are removed. The default garbage collection interval is 1 hour.
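To make the "rules" that nfd-master applies a bit more concrete, here is a hedged sketch of a NodeFeatureRule custom resource, modeled on the pattern in the NFD documentation. The rule name, file name, and label name are hypothetical, and "dummy" is just a stand-in for whichever kernel module you care about.

```shell
# Write a NodeFeatureRule that labels nodes where a given kernel module is loaded.
cat > sample-nodefeaturerule.yaml <<'EOF'
apiVersion: nfd.k8s-sigs.io/v1alpha1
kind: NodeFeatureRule
metadata:
  name: sample-kernel-module-rule  # hypothetical name
spec:
  rules:
    - name: "label nodes with the dummy module loaded"
      labels:
        # Hypothetical label name, applied when the rule matches.
        "feature.node.kubernetes.io/sample-dummy-module": "true"
      matchFeatures:
        - feature: kernel.loadedmodule
          matchExpressions:
            dummy: {op: Exists}
EOF

# kubectl apply -f sample-nodefeaturerule.yaml
```

nfd-master evaluates a rule like this against the raw data the workers report and only then writes the resulting label to the Node object.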

NFD Hands-On

Now let's put the learning into practice.

NFD is an add-on component in Kubernetes, so it needs to be installed separately in your cluster.

Before you install NFD, it's a good idea to check the existing labels on your nodes to see what Kubernetes already provides by default.

kubectl get no --show-labels

Now, run the following command to install Node Feature Discovery.

helm install -n node-feature-discovery --create-namespace nfd oci://registry.k8s.io/nfd/charts/node-feature-discovery --version 0.18.1

If you want to deploy the nfd-topology-updater pod as well, add the flags --set topologyUpdater.createCRDs=true and --set topologyUpdater.enable=true to the above command.

Note: nfd-topology-updater is not useful without a topology-aware scheduler plugin.

helm install -n node-feature-discovery --create-namespace nfd oci://registry.k8s.io/nfd/charts/node-feature-discovery --version 0.18.1 --set topologyUpdater.createCRDs=true --set topologyUpdater.enable=true

Run the following command to check if the pods are created and running.

kubectl get po -n node-feature-discovery

Once the pods are running, run the following command to check the node labels again.

$ kubectl get no --show-labels | grep multi-node-cluster-worker2

.

.

beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,feature.node.kubernetes.io/cpu-cpuid.ADX=true,feature.node.kubernetes.io/cpu-cpuid.AESNI=true,feature.node.kubernetes.io/cpu-cpuid.AVX2=true,feature.node.kubernetes.io/cpu-cpuid.AVX=true,feature.node.kubernetes.io/cpu-cpuid.CMPXCHG8=true,feature.node.kubernetes.io/cpu-cpuid.FMA3=true,feature.node.kubernetes.io/cpu-

.

.

You can see that NFD has added many more labels to the node.
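The raw --show-labels output is hard to read, so here is a small helper sketch that prints only the NFD labels, one per line. The function name nfd_labels is my own, and the sample string is a shortened version of the output above.

```shell
# Print one NFD label per line from a comma-separated label string on stdin.
nfd_labels() {
  tr ',' '\n' | grep '^feature\.node\.kubernetes\.io/' | sort
}

# Demo with a shortened sample of the label string shown above.
printf '%s\n' 'beta.kubernetes.io/arch=amd64,feature.node.kubernetes.io/cpu-cpuid.AVX2=true,feature.node.kubernetes.io/cpu-cpuid.ADX=true' | nfd_labels

# Against a live cluster (the labels are the last column of the output):
# kubectl get no multi-node-cluster-worker2 --show-labels --no-headers | awk '{print $NF}' | nfd_labels
```

Piping the result through wc -l also gives a quick count of how many labels NFD has added to a node.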

Limit/Filter Node Labels

By default, NFD creates labels from all of its feature sources and adds them to the node. This leads to label explosion, meaning too many labels are created on your Kubernetes nodes.

Too many labels bloat the Node object's metadata, which can slow down API responses.

To mitigate this, you can limit or filter which labels NFD creates.

During the NFD installation, you can control the label filtering using the --set worker.config.core.labelSources flag.

Let's say you only need labels for CPU features. You can pass the flag --set worker.config.core.labelSources="{cpu}" so that only the cpu source produces labels.

For example,

helm install -n node-feature-discovery --create-namespace nfd oci://registry.k8s.io/nfd/charts/node-feature-discovery --version 0.18.1 --set worker.config.core.labelSources="{cpu}"

NFD supports many more customizations. Please refer to the official documentation for complex setups.
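If you prefer to keep the label filter in version control, the same setting can live in a Helm values file instead of a --set flag. This is a minimal sketch. The file name nfd-values.yaml is my own choice, and the keys mirror the worker.config.core.labelSources path used above.

```shell
# Write a values file equivalent to --set worker.config.core.labelSources="{cpu}".
cat > nfd-values.yaml <<'EOF'
worker:
  config:
    core:
      labelSources:
        - cpu
EOF

# Install NFD with the values file:
# helm install -n node-feature-discovery --create-namespace nfd \
#   oci://registry.k8s.io/nfd/charts/node-feature-discovery \
#   --version 0.18.1 -f nfd-values.yaml
```

Adding another source later is then a one-line change to the list rather than a longer command line.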

That's a Wrap!

NFD is not required for every Kubernetes setup.

But when you are running AI, ML, or network-heavy workloads, it can come in handy.

It helps Kubernetes understand what your nodes are truly capable of, so pods land exactly where they perform best.

Over to you!

Try out NFD and see if it adds value to your projects.

🚀 Docker Tip

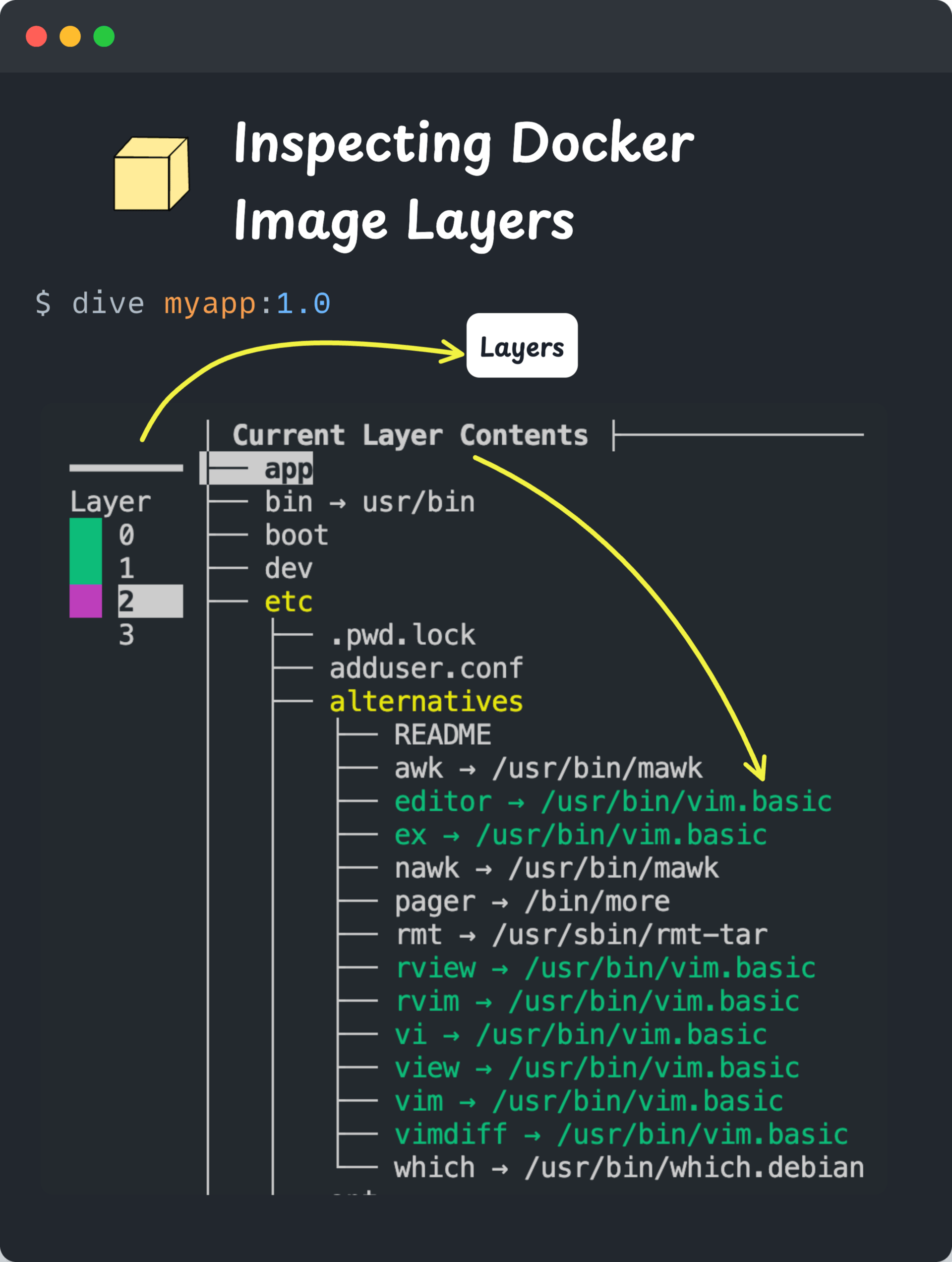

As you may know, every Docker image is made up of layers, each one added by an instruction in your Dockerfile. These layers can tell you why your image is large, slow to build, or hard to cache.

Here is how you can inspect the layers and see what changed in each one.

You can use the dive utility.

It gives a visual view of:

✅ Every layer created

✅ What files were added or changed

✅ How much space each layer uses

It also gives you an image efficiency score that tells you how much data is duplicated or wasted across the layers in your image.
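As a hedged sketch, the efficiency score can also be used as a pass/fail gate in CI. The snippet below writes a .dive-ci rules file of the kind described in the dive README. The thresholds are example values, and my-app:latest is a hypothetical image name.

```shell
# Write a dive CI rules file with example thresholds.
cat > .dive-ci <<'EOF'
rules:
  # Fail if the efficiency score drops below 95% (ratio between 0 and 1).
  lowestEfficiency: 0.95
  # Fail if wasted space reaches 20MB or more.
  highestWastedBytes: 20MB
  # Fail if wasted space makes up 20% or more of the image.
  highestUserWastedPercent: 0.20
EOF

# Explore an image interactively:
# dive my-app:latest

# Run non-interactively and fail on the rules above:
# CI=true dive my-app:latest
```

Start with loose thresholds and tighten them once you have cleaned up the biggest layers.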

🤝 3 Ways I Can Help You Level Up

The DevOpsCube learning platform crossed 10,000+ learners!

To celebrate, I am offering a 40% discount on my courses with code HALLOWEEN40 at checkout (limited-time offer).

Kubernetes & CKA Course & Practice Bundle: Master Kubernetes & prepare for CKA with 80+ exam style practice scenarios.

Kubernetes & CKA Course: Master Kubernetes with real-world examples and achieve CKA Certification with my Comprehensive Course.

CKA Exam Practice Questions & Explanations: 80+ practical, exam-style scenarios designed to help you practice and pass the CKA exam with confidence.