👋 Hi! I’m Bibin Wilson. In each edition, I share practical tips, guides, and the latest trends in DevOps and MLOps to make your day-to-day DevOps tasks more efficient. If someone forwarded this email to you, you can subscribe here to never miss out!

In this edition, we will look at how ML applications differ from traditional applications and walk through the following key concepts with examples.

What is ML

What is an ML algorithm

What is a Model

How apps use a Model

What is inferencing

Size of Models

Note: I am not an ML expert. This content is not meant to teach you ML but rather to help you understand some high-level ML concepts to get started with your MLOps journey.

Let's get started!

Traditional Application

As DevOps engineers, we usually deal with applications that are either UI-based or API-based, for example, a website and its backend APIs.

These applications are built on predefined logic. We use a framework (e.g., Flask, FastAPI) and libraries, and write code so that when certain data comes in, the application processes it according to that logic and returns an expected output.

For example,

Input comes in (login credentials, API requests using JWT, etc.)

Predefined backend logic processes it (authentication checks, business rules)

Expected output is returned (access granted/denied, API responses, etc.)

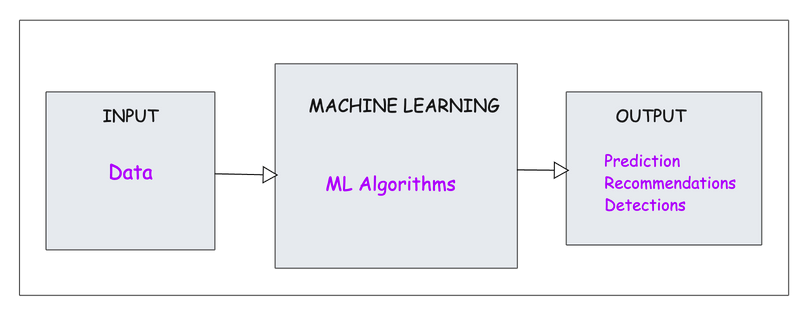
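The Python sketch below makes "predefined logic" concrete by implementing the login flow described above. The user store, the username `alice`, and the `login` function are hypothetical. Every outcome is decided by rules a developer wrote by hand.

```python
import hashlib
import hmac

# Hypothetical user store: username -> SHA-256 hex digest of the password.
# A real system would store salted, slow hashes (e.g. bcrypt or argon2).
USERS = {"alice": hashlib.sha256(b"s3cret").hexdigest()}


def login(username: str, password: str) -> str:
    """Predefined rule: grant access only if the user exists and the password hash matches."""
    stored_hash = USERS.get(username)
    if stored_hash is None:
        return "access denied"
    supplied_hash = hashlib.sha256(password.encode()).hexdigest()
    # compare_digest compares in constant time to avoid timing side channels
    if hmac.compare_digest(stored_hash, supplied_hash):
        return "access granted"
    return "access denied"


print(login("alice", "s3cret"))  # access granted
print(login("alice", "wrong"))   # access denied
```

Nothing in this code is learned. To change its behavior, you change the code.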

What is Machine Learning?

As the name suggests, Machine Learning is about making a machine learn.

In traditional application development, the logic is predefined, meaning we write code that follows strict rules (e.g., if a username and password match a database entry, access is granted).

In contrast, ML applications don't rely on hand-written decision rules. Instead, they learn those rules from data.

Here are a few real-world systems that use ML:

Predicting whether an email is spam or not.

Recommending products to users on an e-commerce site based on purchase history, browsing behavior etc.

So how do you make a machine learn?

First, we need to understand machine learning algorithms.

Machine Learning Algorithms

You are probably familiar with computer science algorithms such as QuickSort and MergeSort.

These algorithms are rule-based, meaning a programmer defines the logic explicitly. The same is true of search algorithms (e.g., binary search) and simple decision rules (e.g., if X > 5, then do Y).

Machine learning (ML) algorithms, on the other hand, learn patterns from data instead of relying on hardcoded rules.

Machine learning algorithms build on concepts from statistics, calculus, and linear algebra. Well-known examples include Linear Regression, Random Forest, and XGBoost.

Instead of writing rules like “if CPU is over 90% and latency is more than 200ms, send an alert,” you give an ML algorithm a list of past server logs labelled as “crashed” or “didn’t crash.”

For example, by training a Logistic Regression algorithm on this server data, you can find which combination of metrics (CPU, memory, I/O) is most strongly associated with a crash.

As it is retrained on more data, it can pick up patterns you might never have thought to script explicitly.
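To show what "learning from data" means, here is a minimal, from-scratch sketch of logistic regression trained with gradient descent on a handful of made-up server logs. The data, the feature choice (CPU and memory utilisation as fractions), the learning rate, and the function names are all hypothetical. In practice you would use a library implementation such as scikit-learn's `LogisticRegression`.

```python
import math

# Hypothetical labelled server logs: (cpu_utilisation, memory_utilisation) -> crashed?
# Values are fractions (0.95 = 95%). 1 = "crashed", 0 = "didn't crash".
LOGS = [
    ((0.95, 0.90), 1),
    ((0.92, 0.85), 1),
    ((0.88, 0.95), 1),
    ((0.97, 0.80), 1),
    ((0.30, 0.40), 0),
    ((0.45, 0.50), 0),
    ((0.60, 0.35), 0),
    ((0.20, 0.60), 0),
]


def sigmoid(z: float) -> float:
    """Squash any real-valued score into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(data, lr=0.5, epochs=2000):
    """Fit weights and bias with batch gradient descent on the log-loss."""
    n_features = len(data[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in data:
            pred = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
            error = pred - label  # gradient of the log-loss w.r.t. the score
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        # Average the gradients over all logs and take one step downhill
        weights = [w - lr * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(data)
    return weights, bias


weights, bias = train_logistic_regression(LOGS)
print("learned weights:", weights, "bias:", bias)
```

Nobody wrote a threshold like "CPU over 90%" here. The training loop finds the weights and bias that separate crashes from non-crashes.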

💡 Libraries like scikit-learn, TensorFlow, and PyTorch provide ready-made implementations of these ML algorithms so you don’t have to write them from scratch.

What is a model?

A model is what we get after training a machine learning algorithm on data.

Think of it as the "brain" that ML systems develop through training.

It learns from past experiences (data) and uses that knowledge to make predictions or decisions.

For example, if we train a model using past server logs, it can learn which conditions (like high CPU, memory usage, or latency) lead to a crash.

After training, the model can look at new server data and say something like, “There is an 85% chance this server will crash,” so we can fix the problem before it happens. (The 85% figure is just an illustration.)
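The sketch below shows that a trained model is essentially a set of learned numbers plus a function that turns new inputs into a probability. The weights, the bias, and the three features (CPU, memory, p95 latency) are hypothetical values chosen for illustration, not the output of real training.

```python
import math

# Hypothetical parameters, as if produced by training on past server logs.
# Feature order: CPU utilisation, memory utilisation, p95 latency in seconds.
WEIGHTS = [6.0, 4.0, 3.0]
BIAS = -9.0


def crash_probability(cpu: float, memory: float, latency_s: float) -> float:
    """Apply the learned weights and squash the score into a 0..1 probability."""
    score = WEIGHTS[0] * cpu + WEIGHTS[1] * memory + WEIGHTS[2] * latency_s + BIAS
    return 1.0 / (1.0 + math.exp(-score))


p = crash_probability(cpu=0.95, memory=0.90, latency_s=0.48)
print(f"Crash probability: {p:.0%}")  # Crash probability: 85%
```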

Using a Model

So, how do we turn this trained model into something we can actually use?

For comparison, when you build a traditional Java application, the final artifact is typically a JAR or WAR file that can be deployed and executed.

Similarly, in machine learning, once a model is trained, it needs to be saved, deployed, and integrated into an application so it can make real-time predictions.

First, we save the trained model as a file. This could be a .pkl (pickle) file for Python libraries such as scikit-learn, or a .h5 file for TensorFlow/Keras. This file stores everything the model learned during training.
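Here is a minimal sketch of the save/load step using Python's built-in `pickle` module. The `CrashModel` class, its parameter values, and the file name `crash_model.pkl` are hypothetical stand-ins. With a real scikit-learn estimator, you would pass the fitted estimator object to `pickle.dump` (or `joblib.dump`) in the same way.

```python
import os
import pickle
import tempfile
from dataclasses import dataclass


@dataclass
class CrashModel:
    """Hypothetical trained model: just the parameters learned during training."""
    weights: list
    bias: float


model = CrashModel(weights=[6.0, 4.0, 3.0], bias=-9.0)

# Save the trained model to a .pkl file; this is the deployable artifact.
path = os.path.join(tempfile.gettempdir(), "crash_model.pkl")
with open(path, "wb") as f:
    pickle.dump(model, f)

# Later, e.g. inside the API process, load it back.
# Only unpickle files you trust: loading a pickle can execute arbitrary code.
with open(path, "rb") as f:
    loaded = pickle.load(f)

print(loaded == model)  # True
```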

Next, we deploy the model so it can be used in real-world applications.

One common way is to turn it into an API using Flask or FastAPI.

This lets the model run as an online service, ready to receive new data and answer questions like, "Is this server about to crash?"

The following image shows a basic Flask API that loads a trained model and makes predictions based on incoming data.
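Here is a minimal Flask sketch of such an API. The `/predict` route, the JSON field names, and the hard-coded weights are hypothetical. In a real service, you would load the trained model once at startup (for example with `pickle.load`) instead of hard-coding its parameters.

```python
import math

from flask import Flask, jsonify, request

app = Flask(__name__)

# Hypothetical learned parameters standing in for a model loaded from a .pkl file.
WEIGHTS = {"cpu": 6.0, "memory": 4.0, "latency_s": 3.0}
BIAS = -9.0


@app.route("/predict", methods=["POST"])
def predict():
    data = request.get_json(silent=True)
    if not isinstance(data, dict):
        return jsonify(error="expected a JSON object"), 400
    try:
        values = {name: float(data[name]) for name in WEIGHTS}
    except (KeyError, TypeError, ValueError):
        return jsonify(error=f"expected numeric fields: {list(WEIGHTS)}"), 400
    # Same computation as training-time inference: weighted sum, then sigmoid
    score = sum(WEIGHTS[name] * values[name] for name in WEIGHTS) + BIAS
    probability = 1.0 / (1.0 + math.exp(-score))
    return jsonify(crash_probability=round(probability, 3),
                   prediction=int(probability >= 0.5))
```

Assuming the file is saved as `app.py`, you can start it locally with `flask --app app run` and POST JSON such as `{"cpu": 0.95, "memory": 0.90, "latency_s": 0.48}` to `/predict`.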

In production environments, trained models are typically versioned in a dedicated model registry (e.g., AWS SageMaker Model Registry) and served to applications through HTTP endpoints.

Inferencing

Once we deploy the trained model, the next step is inferencing.

Inference is the process of using a trained model to make predictions on new, unseen data.

In our server crash scenario, predictions are typically expressed as:

1 = The event will happen (e.g., "The server may crash.")

0 = The event will not happen (e.g., "The server is okay.")

This is an example of binary classification, where the model assigns one of two possible labels based on the input data.
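A model like this usually outputs a probability, and the application turns it into one of the two labels by applying a threshold. The sketch below assumes a threshold of 0.5; the `to_label` helper and its threshold are illustrative, not a fixed rule.

```python
def to_label(probability: float, threshold: float = 0.5) -> int:
    """Map a predicted crash probability to a binary class: 1 = may crash, 0 = okay."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return 1 if probability >= threshold else 0


LABELS = {1: "The server may crash.", 0: "The server is okay."}

for p in (0.85, 0.12):
    print(p, "->", to_label(p), LABELS[to_label(p)])
```

Lowering the threshold flags more potential crashes, at the cost of more false alarms. That trade-off is a product decision, not something the model decides.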

Here is the curl request that sends input to this API to predict a server crash.

In real-world applications, machine learning models don't just sit idle waiting for manual input. Instead, they are integrated with systems that continuously collect, process, and analyze data, so predictions happen automatically.

In this real-time (online) mode, the steps from data collection to prediction and action happen within milliseconds to seconds, enabling fast, automated decision-making. Recommendation systems in e-commerce are a typical example.

Inference can also run as a batch process, known as batch inferencing, where a large set of records is scored in one go. Batch jobs can take much longer (minutes to hours).
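Here is a minimal sketch of batch inferencing. Instead of answering one request at a time, a scheduled job scores a whole set of records in one pass. The CSV columns, host names, and hard-coded weights are hypothetical, and the inline CSV stands in for a real export file or table.

```python
import csv
import io
import math

# Hypothetical learned parameters, keyed by the CSV column names below.
WEIGHTS = {"cpu": 6.0, "memory": 4.0, "latency_s": 3.0}
BIAS = -9.0

# Stand-in for a nightly export of server metrics.
METRICS_CSV = """host,cpu,memory,latency_s
web-01,0.95,0.90,0.48
web-02,0.20,0.30,0.05
db-01,0.85,0.95,0.30
"""


def score(row: dict) -> float:
    """Crash probability for one row of metrics."""
    z = sum(w * float(row[name]) for name, w in WEIGHTS.items()) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def batch_predict(csv_text: str, threshold: float = 0.5) -> list:
    """Score every row in one pass and return (host, probability, label) tuples."""
    results = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        p = score(row)
        results.append((row["host"], round(p, 3), int(p >= threshold)))
    return results


for host, p, label in batch_predict(METRICS_CSV):
    print(host, p, label)
```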

Size of a Model

Traditional applications (Java or otherwise) typically range from a few KB to a few GB, depending on their code and dependencies.

A small ML model might be comparable in size to a Java app, but large-scale models (e.g., LLMs) far exceed most traditional software.

For example, OpenAI has not published the size of GPT-4, one of the models behind ChatGPT, but it is likely well over ~750GB.
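Why do LLMs get so large? A rough rule of thumb is that the weights file is about the number of parameters multiplied by the bytes used to store each one. The sketch below applies that back-of-the-envelope estimate. It ignores file-format overhead and tokenizer files, and the 7-billion-parameter model is a hypothetical example.

```python
def model_size_gb(num_parameters: float, bytes_per_parameter: float) -> float:
    """Rough size of a model's weights: parameters x bytes per parameter (1 GB = 10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# A hypothetical 7-billion-parameter model stored at different numeric precisions.
for precision, nbytes in [("float32", 4), ("float16", 2), ("int8", 1)]:
    print(f"7B params @ {precision}: ~{model_size_gb(7e9, nbytes):.0f} GB")
# 7B params @ float32: ~28 GB
# 7B params @ float16: ~14 GB
# 7B params @ int8: ~7 GB
```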

Are models only used for predictions?

No, models aren’t only used for prediction.

The example in this guide is just one use case of supervised learning. There are other types of ML, which are beyond the scope of this beginner's guide.

For example, models can also be used for generation.

Classic examples include GPT, DALL-E, and Stable Diffusion, which create new content (text and images) rather than just predicting outcomes.