👋 Hi! I’m Bibin Wilson. In each edition, I share practical tips, guides, and the latest trends in DevOps and MLOps to make your day-to-day DevOps tasks more efficient. If someone forwarded this email to you, you can subscribe here to never miss out!

In the last edition, we explored how ML applications differ from traditional app development, covering key concepts like ML algorithms, models, and how they’re used.

I mentioned “training” a model several times. Today, let’s dive into how a model is trained.

I will break it down step by step, showing where data engineers, data scientists, ML engineers, and DevOps engineers fit into the training process.

Note: I am not an ML expert. This content is not meant to teach you how to train machine learning models. Instead, it aims to help you understand the high-level process and become familiar with the terminology and workflows.

What does “Training” mean in ML?

In simple terms, training is the process of teaching the machine how to make predictions.

It’s similar to how we humans learn.

For example, let's say you are teaching a child to recognize dogs.

You don't write rules like "if it has four legs, a tail, and barks, it's a dog."

Instead, you show the child many examples of dogs, and over time, they learn to recognize dogs of all shapes and sizes.

Similarly, in machine learning, we show the algorithm many examples (data), and it learns the patterns on its own.

The Training Process

To better understand the training process, let's consider an example that a DevOps engineer can relate to: training a model to predict server outages (crash predictor).

Let's look at the whole process.

1. Collecting and Preparing the Data

To train a model, we need historical data, and a lot of it. In general, the more representative data you can collect, the better.

Let’s say you have collected logs from 10,000 servers over the past three years.

The data includes metrics like CPU usage (%), memory usage (%), latency (ms), and more.

2. Cleaning the Data (Data Engineers)

Now, Data Engineers are responsible for cleaning the data (e.g., removing duplicate entries, handling missing values, etc.) and storing it in a central place like an S3 bucket or a database.

This is important because the quality of your data directly impacts how well your model will perform.
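To make the cleaning step concrete, here is a minimal sketch in plain Python. The column names (`cpu_pct`, `memory_pct`, `latency_ms`), the sample rows, and the choice to fill gaps with the median are all my assumptions for illustration. A real pipeline would likely use a library such as pandas and rules agreed with the data team.

```python
from statistics import median

# Hypothetical metric columns; real log schemas will differ.
METRICS = ("cpu_pct", "memory_pct", "latency_ms")

def clean_logs(rows):
    """Drop duplicate entries, then fill missing metric values with the median."""
    # 1. Remove duplicates, keeping the first row seen per (server, timestamp).
    seen = set()
    unique_rows = []
    for row in rows:
        key = (row["server"], row["timestamp"])
        if key not in seen:
            seen.add(key)
            unique_rows.append(dict(row))  # copy so the input is not modified

    # 2. Fill missing values (None) with the median of the values that exist.
    for metric in METRICS:
        present = [r[metric] for r in unique_rows if r[metric] is not None]
        fill_value = median(present)
        for r in unique_rows:
            if r[metric] is None:
                r[metric] = fill_value
    return unique_rows

raw_logs = [
    {"server": "web-1", "timestamp": "2024-01-01T00:00", "cpu_pct": 40.0, "memory_pct": 55.0, "latency_ms": 120.0},
    {"server": "web-1", "timestamp": "2024-01-01T00:00", "cpu_pct": 40.0, "memory_pct": 55.0, "latency_ms": 120.0},  # duplicate
    {"server": "web-2", "timestamp": "2024-01-01T00:00", "cpu_pct": None, "memory_pct": 70.0, "latency_ms": 300.0},  # missing CPU
    {"server": "web-3", "timestamp": "2024-01-01T00:00", "cpu_pct": 90.0, "memory_pct": 80.0, "latency_ms": 450.0},
]
cleaned_logs = clean_logs(raw_logs)
```

Whether to fill a missing value or drop the row entirely is a judgment call that depends on the data.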

3. Labelling the Data (Data Scientists)

Once you have clean data, Data Scientists may work with DevOps Engineers to analyze the historical logs and monitoring data to identify crash events.

This collaboration is important because DevOps Engineers have access to incident management systems, on-call rotation logs, post-mortem documents, and other operational data.

After identifying the events, they develop rules or scripts to assign labels (1 for crash, 0 for no crash) to all the log data. For very large datasets, this labelling process is automated.

These labels help the model understand the patterns that lead to specific outcomes.
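As a rough idea of what such a labelling script could look like, here is a small sketch. The `CRASHES` mapping, the 30-minute `LOOKAHEAD` window, and the server names are hypothetical. In practice the crash times would come from your incident management system, and the window would be chosen with the team.

```python
from datetime import datetime, timedelta

# Hypothetical crash times per server, e.g. exported from incident records.
CRASHES = {
    "web-1": [datetime(2024, 1, 1, 12, 0)],
}
# Assumed rule: a log row is a "crash" example if a crash follows within 30 minutes.
LOOKAHEAD = timedelta(minutes=30)

def label_row(server, timestamp):
    """Return 1 if `server` crashed within LOOKAHEAD after `timestamp`, else 0."""
    for crash_time in CRASHES.get(server, []):
        if timedelta(0) <= crash_time - timestamp <= LOOKAHEAD:
            return 1
    return 0

log_rows = [
    ("web-1", datetime(2024, 1, 1, 11, 0)),   # an hour before the crash
    ("web-1", datetime(2024, 1, 1, 11, 45)),  # 15 minutes before the crash
    ("web-2", datetime(2024, 1, 1, 11, 45)),  # different server, no incident
]
labels = [label_row(server, ts) for server, ts in log_rows]
```

Only the second row falls inside the window, so it is the only one labelled as a crash.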

4. Feature Engineering (Data Scientists & ML Engineers)

What is a feature?

A feature is a meaningful input that a machine learning model uses.

For example, our raw data can already have basic features like: CPU utilization, memory usage, disk I/O rates.

Why do we need feature engineering?

Raw data, like our 10,000 server log entries, might not directly reveal the patterns that matter (e.g., what causes crashes).

For example, CPU usage (%) alone might not tell the full story of a crash.

But if crashes happen when CPU and latency both spike, combining them into a single feature (CPU_latency_combo = CPU * latency) could make that pattern clearer to the model.
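Here is a tiny sketch of that idea in plain Python. The column names `CPU` and `Latency` and the sample values are assumptions. Only `CPU_latency_combo` and `Label` come from this example.

```python
def add_cpu_latency_combo(rows):
    """Return copies of the rows with the engineered CPU_latency_combo column added."""
    return [
        {**row, "CPU_latency_combo": row["CPU"] * row["Latency"]}
        for row in rows
    ]

# Made-up rows: CPU usage (%), latency (ms), and the crash label.
rows = [
    {"CPU": 30, "Latency": 100, "Label": 0},
    {"CPU": 95, "Latency": 480, "Label": 1},
]
engineered = add_cpu_latency_combo(rows)
```

The new column is large only when both CPU and latency are high, which is the pattern we want the model to notice.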

So how are features created?

These features are designed based on domain knowledge and understanding of the data.

The following image shows an example of original data and feature engineered data that has a new column.

The number of features depends on the complexity of the system. For example, in a spam detection model, you might have 100+ features.

5. Algorithm Selection (Data Scientists and ML Engineers)

The next step is to choose an ML algorithm.

For our crash predictor, we choose Logistic Regression from scikit-learn.

How?

Data scientists and ML engineers look at the problem (a yes/no crash prediction) and the data (numerical features like CPU, latency, CPU_latency_combo) and decide which algorithm fits.

Logistic Regression is fast, works well for binary outcomes, and doesn’t need tons of compute. Perfect for our use case.
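To give a feel for why it suits yes/no outcomes, here is a sketch of the core idea: a weighted sum of the features is passed through the logistic (sigmoid) function to get a probability between 0 and 1. The weights and bias below are made up for illustration. Real values are learned during training.

```python
import math

def crash_probability(features, weights, bias):
    """Apply the logistic function to a weighted sum of the features."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

# Made-up weights for CPU (%) and latency (ms); training would find real ones.
weights = [0.04, 0.01]
bias = -6.0

calm = crash_probability([20, 50], weights, bias)    # low CPU, low latency
busy = crash_probability([95, 450], weights, bias)   # high CPU, high latency

# A common convention: predict "crash" when the probability is at least 0.5.
predicted_crash = busy >= 0.5
```

With these illustrative numbers, the calm server gets a probability well below 0.5 and the busy one well above it.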

In real-world machine learning projects, selecting the right algorithm involves a structured, evidence-based process rather than simply choosing what's trendy.

6. Splitting the Data (Data Scientists)

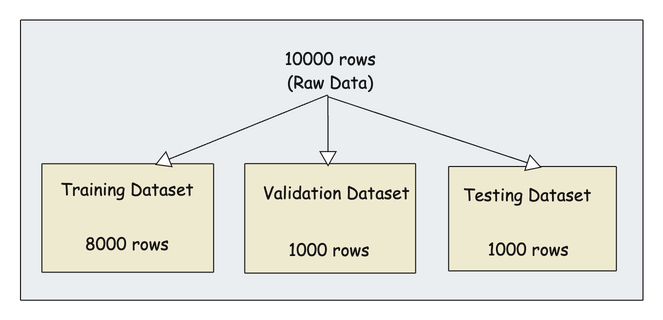

Now, let's say we have 10,000 logs. We will not use all of them for training.

Instead, we typically split the data into:

Training set (70-80%): Used to teach the model.

Validation set (10-15%): Used to tune the model during training, which means adjusting the model’s settings, called hyperparameters.

Test set (10-15%): Used to evaluate the final model.

So, who is responsible for splitting the data?

Mostly Data Scientists. They might use Python libraries (e.g., scikit-learn's train_test_split) or custom scripts to handle this.

Sometimes Data Engineers. In large-scale or production systems, splitting the data becomes part of a repeatable, automated process managed by Data Engineers.
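Here is a minimal sketch of a 70/15/15 split using scikit-learn's train_test_split, called twice. The stand-in row ids, the made-up labels, and the use of `stratify` (to keep a similar crash ratio in each split) are my assumptions, not a prescribed setup.

```python
from sklearn.model_selection import train_test_split

# Stand-in data: 10,000 row ids and made-up labels (about 10% "crash" rows).
rows = list(range(10_000))
labels = [1 if i % 10 == 0 else 0 for i in rows]

# First keep 70% for training and set aside the remaining 30%.
train_rows, rest_rows, train_labels, rest_labels = train_test_split(
    rows, labels, test_size=0.30, stratify=labels, random_state=42
)

# Then split that 30% evenly into validation and test sets (15% each).
val_rows, test_rows, val_labels, test_labels = train_test_split(
    rest_rows, rest_labels, test_size=0.50, stratify=rest_labels, random_state=42
)
```

Fixing `random_state` makes the split repeatable, which helps when the split becomes part of an automated pipeline.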

7. Model Training (Data Scientists & ML Engineers)

When we say training, we are basically running code with data and the chosen algorithm, along with the required logic to predict crashes.

Here is a sample Python-based training code that uses the split data and the selected algorithm. Refer to the image with the code example and the explanation below for better understanding.

Here is how the whole training process works.

The training process begins by loading two datasets: one for training with 8,000 rows and another for testing with 2,000 rows.

From these datasets, it selects specific features like CPU usage, latency, and a combined feature called "CPU_latency_combo". These serve as inputs to the model.

The target variable, labeled "Label," indicates whether a server crash occurred or not. It is a known value that the model's predictions are checked against.

Then a logistic regression model is chosen for this task.

During training, the model uses the training features and their corresponding crash labels to learn patterns.

Once trained, it predicts outcomes on the test dataset, where it uses the same features without seeing the actual labels.

Finally, the model’s predictions are compared against the true labels from the test data to calculate accuracy, showing how often the model's guesses match reality.
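The steps above can be sketched roughly like this with scikit-learn. To keep it runnable on its own, it generates synthetic data instead of loading real log files, so the crash rule, the value ranges, and the resulting accuracy are made up and mean nothing beyond illustration. The feature scaling step is also my addition.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)

def make_synthetic_logs(n_rows):
    """Generate fake features and labels; a real script would read CSV files."""
    cpu = rng.uniform(5, 100, n_rows)        # CPU usage (%)
    latency = rng.uniform(10, 500, n_rows)   # latency (ms)
    combo = cpu * latency                    # CPU_latency_combo
    # Made-up rule: crashes happen when the combo is high, plus some noise.
    label = ((combo + rng.normal(0, 3000, n_rows)) > 25000).astype(int)
    features = np.column_stack([cpu, latency, combo])
    return features, label

# Training set (8,000 rows) and test set (2,000 rows).
X_train, y_train = make_synthetic_logs(8000)
X_test, y_test = make_synthetic_logs(2000)

# Scale the features, then fit Logistic Regression on the training data only.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

# Predict on the test features, then compare against the true labels.
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Test accuracy: {accuracy:.2f}")
```

Note that the model never sees `y_test` while fitting. The test labels are used only at the end to score the predictions.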

So when can we say that the model is trained?

Saying a model is “trained” means the crash predictor is able to spot server trouble based on CPU, latency, and CPU_latency_combo.

Logistic Regression adjusts its internal weights using the training data. If it then predicts crashes well on test_logs.csv (e.g., 85% accuracy), that is evidence it learned real patterns rather than memorizing the training data.

The model is not usefully trained if:

Test accuracy is around 0.50, which for a balanced yes/no dataset is no better than random guessing. It means the model didn’t learn anything useful. At this point, the data scientist steps back in to improve the model.

They might engineer new features or improve existing ones, try different algorithms, tune hyperparameters, collect more data etc.

Then the training process is repeated until the model performs clearly better than random guessing.
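One simple way to check "better than guessing" is to compare the model's accuracy with a naive baseline that always predicts the most common label. The sketch below uses made-up labels and predictions. The helper names are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return matches / len(y_true)

def majority_baseline_accuracy(y_true):
    """Accuracy of always predicting the most common label."""
    most_common = max(set(y_true), key=y_true.count)
    return y_true.count(most_common) / len(y_true)

# Made-up test labels (mostly "no crash") and hypothetical model output.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]

model_acc = accuracy(y_true, y_pred)
baseline_acc = majority_baseline_accuracy(y_true)

# If the model cannot beat the naive baseline, it needs more work.
needs_more_work = model_acc <= baseline_acc
```

This matters for crash data in particular, because crashes are usually rare and a model that always says "no crash" can look accurate without being useful.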

Role of DevOps Engineers in Training

These processes cannot all be done manually, especially at scale.

Here is where DevOps Engineers come in.

They write IaC to set up the dev infrastructure, notebooks, and storage (e.g., S3).

They also deploy ETL pipelines (e.g., Apache Airflow DAGs) that perform the data engineering tasks.

ETL stands for Extract, Transform, Load. It is the process to extract data from sources (e.g., logs, databases), transform it by cleaning and formatting, and load it into storage (e.g., S3, databases) for use.

While Data Engineers write the core logic specific to data processing, DevOps Engineers may need to write Python scripts for Airflow DAGs to orchestrate tasks (extract → transform → load).
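To show the extract → transform → load flow without tying it to any orchestrator version, here is a plain Python sketch. The inline CSV, the column names, and the list used as "storage" are stand-ins I made up. In a real setup, each function could become one task in an Airflow DAG, with S3 or a database as the destination.

```python
import csv
import io

# Stand-in for raw logs; a real pipeline would read from files or databases.
RAW_LOG = """server,cpu_pct,latency_ms
web-1,40,120
web-1,40,120
web-2,,300
web-3,90,450
"""

def extract(source_text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(source_text)))

def transform(rows):
    """Transform: drop duplicates and rows with missing values, convert types."""
    seen, cleaned = set(), []
    for row in rows:
        key = tuple(row.values())
        if key in seen or "" in row.values():
            continue
        seen.add(key)
        cleaned.append({
            "server": row["server"],
            "cpu_pct": float(row["cpu_pct"]),
            "latency_ms": float(row["latency_ms"]),
        })
    return cleaned

def load(rows, storage):
    """Load: write rows to storage (a list stands in for S3 or a database)."""
    storage.extend(rows)
    return len(rows)

# Run the three steps in order, the way an orchestrator would chain its tasks.
storage = []
loaded_count = load(transform(extract(RAW_LOG)), storage)
```

Keeping each step as its own function makes it easier to retry, monitor, and schedule them independently.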

We also need to set up CI/CD for ML pipelines.

Then we implement monitoring systems (e.g., Prometheus, Grafana) to track infrastructure usage, data pipeline health, and model training processes.

We also need to ensure that all processes follow security standards and compliance requirements.

We will learn more about this in the MLOps lifecycle edition.