👋 Hi! I’m Bibin Wilson. Every Saturday, I publish a deep-dive MLOps edition to help you upskill step by step. If someone forwarded this email to you, you can subscribe here so you never miss an update.

In today’s deep dive edition, we will cover:

The model we will build in this series

Why data collection comes before MLOps

How ETL combines data from many systems

A real Airflow DAG pipeline example

How data shrinks from ~0.8 TB to ~300 MB

Who does what: Data Eng, Data Science, InfoSec, DevOps

In the last MLOps post, I gave you a high-level roadmap on how to upskill in MLOps with your DevOps skillset. If you missed that edition, please read it here.

Starting today, you will receive a new post in this series every Saturday.

We will start from the basics: building a model from scratch, then working our way up to model monitoring and eventually to LLMs, RAG, LLMOps, Agents, and more (primarily on Kubernetes).

Why Are We Building a Model from Scratch?

You might be wondering, if MLOps is about operations, why are we learning how to build a model?

That’s a fair question. Let me explain this with a DevOps example.

A DevOps engineer does not write the Java application. But understanding how the application is built, how it is configured, how dependencies work, and how the build process runs is what makes a DevOps engineer effective in a real-world CI/CD setup.

We are going to apply the same thinking to MLOps. You do not need to become a data engineer or a data scientist, but you do need to understand the core workflow: how data flows in, how a model gets trained, and what the output looks like.

This way, when you are setting up pipelines, deploying models, or debugging failures, you actually know what is going on under the hood.

Disclaimer: I am not an AI/ML developer. The main goal of this series is to understand the infrastructure side of MLOps. The model example used here is only for learning and demo purposes.

Let’s get started.

About the Model

In this series, we will follow the full journey of a machine learning project:

From a local prototype

To a production-grade deployment

Use Case: Employee Attrition Prediction for a large organization with 500,000 employees.

Problem Statement: Employee attrition is a common HR challenge. Organizations want to identify employees who are likely to leave before they actually leave. If you can spot the risk early, you can take action. Maybe it is workload, maybe it is compensation, maybe it is a lack of growth opportunities.

Because we already know the outcome for past employees (stayed vs. left), this is a supervised learning problem. The model learns patterns from historical data and uses those patterns to predict risk for current employees.

No magic. Just pattern recognition at scale.
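To make "learning from labeled history" concrete, here is a deliberately naive sketch. It is not a real ML model and not what we will build later in the series: it "trains" by computing the historical attrition rate per department and "predicts" by looking up a current employee's department. The records and field names are hypothetical.

```python
from collections import defaultdict

# Hypothetical historical records: one feature plus the known outcome ("left").
historical = [
    {"department": "Sales", "left": True},
    {"department": "Sales", "left": False},
    {"department": "Sales", "left": True},
    {"department": "Engineering", "left": False},
    {"department": "Engineering", "left": False},
    {"department": "Engineering", "left": True},
]

def learn_attrition_rates(records):
    """'Training': learn the fraction of past employees who left, per department."""
    totals = defaultdict(int)
    leavers = defaultdict(int)
    for record in records:
        totals[record["department"]] += 1
        leavers[record["department"]] += int(record["left"])
    return {dept: leavers[dept] / totals[dept] for dept in totals}

def predict_risk(rates, employee, default=0.0):
    """'Inference': score a current employee (no outcome yet) with the learned rates."""
    return rates.get(employee["department"], default)

rates = learn_attrition_rates(historical)
print(predict_risk(rates, {"department": "Sales"}))  # 2 of 3 past Sales employees left
```

A real model learns from many features at once, but the shape is the same: labeled history in, a scoring function for unlabeled current employees out.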

The first step in building the model is collecting the historical data.

Why Data Comes First

Before we train any ML model, the first thing we need is data. Everything else, including training, tuning, and deployment, depends on it.

For our Employee Attrition model example, the company has records for 5 lakh (500,000) employees spanning the past 20 years.

Now here is the important part.

The data does not come from a single team or a single system. It is spread across multiple platforms and formats.

Now let's look at the data sources for our ML model.

Data Sources: Where the Data Lives

For an employee attrition model, data typically needs to be pulled from the following enterprise systems.

| System | What It Contains | Example Data Points |
|---|---|---|
| HRMS | Core employee records and lifecycle events | Joining date, role, department, promotions, exit interviews |
| Payroll Systems | Compensation and financial data | Salary, bonuses, increments, tax brackets |
| LMS Platforms | Learning and development records | Training hours, certifications, skill assessments |
| Performance Review Tools | Evaluation and feedback data | Ratings, feedback scores, goal completion rates |

Now pause here and think.

The data from these systems will be in different formats, for example documents, SQL databases, XML exports, CSV dumps, JSON APIs, and more.

This is the core problem we need to solve. We need to unify the data from different systems into a single, clean dataset.
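Here is a small sketch of what "unifying" means in practice. It assumes three hypothetical exports (an HRMS CSV, a payroll JSON response, and an LMS XML file), each using its own field names, and maps them onto one record per employee keyed by a common `employee_id`. Real connectors and schemas will differ.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical exports: same employees, three formats, three naming conventions.
HRMS_CSV = "emp_id,department,joining_date\nE001,Sales,2015-03-01\nE002,Engineering,2019-07-15\n"
PAYROLL_JSON = '[{"employeeId": "E001", "annualSalary": 52000}, {"employeeId": "E002", "annualSalary": 88000}]'
LMS_XML = """<records>
  <employee id="E001"><trainingHours>12</trainingHours></employee>
  <employee id="E002"><trainingHours>40</trainingHours></employee>
</records>"""

def from_hrms(text):
    # CSV rows -> {employee_id: fields}
    return {row["emp_id"]: {"department": row["department"], "joining_date": row["joining_date"]}
            for row in csv.DictReader(io.StringIO(text))}

def from_payroll(text):
    # JSON array -> {employee_id: fields}
    return {item["employeeId"]: {"salary": item["annualSalary"]} for item in json.loads(text)}

def from_lms(text):
    # XML elements -> {employee_id: fields}
    root = ET.fromstring(text)
    return {emp.get("id"): {"training_hours": int(emp.findtext("trainingHours"))}
            for emp in root.iter("employee")}

def unify(*sources):
    """Merge the per-system dictionaries into one flat record per employee."""
    merged = {}
    for source in sources:
        for emp_id, fields in source.items():
            merged.setdefault(emp_id, {"employee_id": emp_id}).update(fields)
    return list(merged.values())

records = unify(from_hrms(HRMS_CSV), from_payroll(PAYROLL_JSON), from_lms(LMS_XML))
print(records[0])
# {'employee_id': 'E001', 'department': 'Sales', 'joining_date': '2015-03-01', 'salary': 52000, 'training_hours': 12}
```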

PII Handling & Compliance

Before we even think about training a model, we must talk about compliance. (Read this edition to learn more about compliance.)

If the data contains employees' personal information (PII) like names, email addresses, or phone numbers, we cannot use it as is.

Here is what typically happens (and it is mandatory):

PII removal: Personal identifiers (names, emails, etc.) are removed and replaced with anonymized employee IDs.

Sensitive field masking: Fields like salary bands or health information are masked or aggregated to prevent re-identification.

Compliance: The pipeline must ensure GDPR and DPDP Act compliance throughout the process.
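The first two steps can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a compliance-approved implementation: the field names are hypothetical, the anonymized ID is a keyed HMAC-SHA256 hash (so the same person gets the same ID in every system, which keeps joins working), and salaries are replaced by hypothetical 25,000-wide bands. Note that under GDPR, pseudonymized data like this generally still counts as personal data, so both the key and the output need protection.

```python
import hashlib
import hmac

# Assumption: in a real pipeline this key comes from a secrets manager, never from code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"
PII_FIELDS = {"name", "email", "phone"}  # hypothetical direct identifiers

def pseudonymize_id(raw_id: str) -> str:
    """Keyed hash: stable across systems, not reversible without the key."""
    digest = hmac.new(PSEUDONYM_KEY, raw_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return "EMP-" + digest[:16]

def salary_band(salary: int, width: int = 25_000) -> str:
    """Replace an exact salary with a coarse band to reduce re-identification risk."""
    low = (salary // width) * width
    return f"{low}-{low + width - 1}"

def anonymize(record: dict) -> dict:
    clean = {k: v for k, v in record.items() if k not in PII_FIELDS}  # drop identifiers
    clean["employee_id"] = pseudonymize_id(record["employee_id"])
    if "salary" in clean:
        clean["salary_band"] = salary_band(clean.pop("salary"))
    return clean

row = {"employee_id": "E001", "name": "Asha Rao", "email": "asha@example.com",
       "salary": 52000, "department": "Sales"}
print(anonymize(row))  # name/email gone, salary replaced by '50000-74999'
```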

💡 Key Insight

This is typically a shared responsibility between the Data Engineering team and the InfoSec/Compliance team. Tools like Microsoft Presidio are used to automatically detect and mask PII in the data.
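Free-text fields, such as exit interview comments, are where automated detection matters most. The sketch below is a deliberately simplified regex stand-in to show the idea of detect-then-replace; it is not Presidio's API, and real detectors use many more recognizers (including NLP-based name detection, which two regexes cannot do).

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{8,}\d"),
}

def mask_pii(text: str) -> str:
    """Replace every detected entity with a placeholder such as <EMAIL>."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"<{label}>", text)
    return text

comment = "Reach me at asha.rao@example.com or +91 98765 43210 after I leave."
print(mask_pii(comment))
# Reach me at <EMAIL> or <PHONE> after I leave.
```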

The Dataset Pipeline (ETL)

When you work with large amounts of data, it is not possible to handle everything manually.

So, once the data sources and compliance rules are clear, the Data Engineering team builds a dataset pipeline. This pipeline is commonly called ETL, which stands for Extract, Transform, and Load.

Here is what each step means.

Extract: Pull raw data from HRMS, payroll APIs, LMS databases, and performance review tools using connectors.

Transform: Clean the data, join it from multiple sources, and convert it into a common format. Data scientists decide which fields are needed and how the data should be aggregated.

Load: Store the final processed dataset as a single file (such as CSV) in object storage like AWS S3, or load it into a data warehouse, so it can be used for machine learning later.

The ETL process involves orchestrating multiple tools, such as connectors, validators, transformation engines, and PII scanners, to perform the required data operations. Let's look at the key tools.
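The three steps map naturally onto three functions. This minimal sketch assumes hypothetical in-memory rows in place of real connector output and writes the result to a local temp file rather than S3; the point is the shape: extract raw rows, transform them (deduplicate and keep only ML fields), load one clean CSV.

```python
import csv
import os
import tempfile

ML_FIELDS = ["employee_id", "department", "tenure_years", "left"]  # hypothetical schema

def extract():
    # Stand-in for connector output from HRMS, payroll, LMS, etc.
    return [
        {"employee_id": "E001", "department": "Sales", "tenure_years": "4", "left": "1", "badge_color": "blue"},
        {"employee_id": "E001", "department": "Sales", "tenure_years": "4", "left": "1", "badge_color": "blue"},
        {"employee_id": "E002", "department": "Engineering", "tenure_years": "7", "left": "0", "badge_color": "red"},
    ]

def transform(rows):
    """Deduplicate on employee_id and keep only the ML-relevant fields."""
    unique = {}
    for row in rows:
        unique.setdefault(row["employee_id"], {field: row[field] for field in ML_FIELDS})
    return list(unique.values())

def load(rows, path):
    """Write the final dataset as a single CSV file."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=ML_FIELDS)
        writer.writeheader()
        writer.writerows(rows)
    return path

out_path = load(transform(extract()), os.path.join(tempfile.gettempdir(), "attrition_dataset.csv"))
```

In a production pipeline, each of these functions would typically become its own orchestrated task so it can be retried and monitored independently.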

Tools Used For the ETL Pipeline

The following are the commonly used tools to build this kind of dataset pipeline.

Apache Airflow (Orchestration): It schedules and coordinates all pipeline tasks as a DAG (Directed Acyclic Graph).

Airbyte (Data Extraction): It has pre-built connectors to pull data from HRMS, payroll, LMS, and other systems, so in many cases no custom API code is needed.

Apache Spark (Transformation & Data Validation): Processes large volumes of data in parallel and runs quality checks that catch missing values, duplicates, schema mismatches, and data drift.

Microsoft Presidio (PII Detection & Masking): It automatically detects and masks personal information (names, emails, phone numbers) to ensure compliance.

Each tool does one job well, and Airflow coordinates everything.
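To show what "quality checks" means concretely, here is a plain-Python sketch of three of them: schema mismatches, missing values, and duplicate IDs. The expected schema is hypothetical, and at 500,000 employees the same logic would run as a Spark job or in a dedicated validation tool; drift detection is left out to keep it short.

```python
EXPECTED_SCHEMA = {"employee_id": str, "department": str, "tenure_years": int, "left": bool}

def validate(rows):
    """Return human-readable problems; an empty list means the batch passed."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Schema mismatch: missing or unexpected columns
        if set(row) != set(EXPECTED_SCHEMA):
            problems.append(f"row {i}: columns {sorted(row)} do not match schema")
            continue
        # Missing values and wrong types, column by column
        for col, expected_type in EXPECTED_SCHEMA.items():
            value = row[col]
            if value is None:
                problems.append(f"row {i}: missing value in '{col}'")
            elif not isinstance(value, expected_type):
                problems.append(f"row {i}: '{col}' is {type(value).__name__}, expected {expected_type.__name__}")
        # Duplicate records
        if row["employee_id"] in seen_ids:
            problems.append(f"row {i}: duplicate employee_id {row['employee_id']}")
        seen_ids.add(row["employee_id"])
    return problems

batch = [
    {"employee_id": "E001", "department": "Sales", "tenure_years": 4, "left": True},
    {"employee_id": "E001", "department": "Sales", "tenure_years": 4, "left": True},
    {"employee_id": "E002", "department": None, "tenure_years": "7", "left": False},
]
for problem in validate(batch):
    print(problem)
# row 1: duplicate employee_id E001
# row 2: missing value in 'department'
# row 2: 'tenure_years' is str, expected int
```

Inside an orchestrated pipeline, the validation task would raise an exception when this list is non-empty, which marks the task as failed and stops the bad data from reaching the load step.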

Example: Airflow DAG Tasks

In Airflow, each step runs as a task in a DAG.

In our pipeline, the tasks run in sequence, one after another, much like the stages of a CI/CD pipeline.

Each task in Airflow is independent and observable. This means that if task 3 (validation) fails, you know exactly where the problem is, and task 4 will not execute.
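The stop-on-failure behavior can be simulated in plain Python. This is not Airflow code; it is a small model of how a linear DAG behaves, with hypothetical task names (`extract`, `mask_pii`, `validate`, `load`) and a validation task that fails on purpose.

```python
def run_pipeline(tasks):
    """Run (name, callable) pairs in order; once a task fails, skip everything downstream."""
    status = {}
    failed = False
    for name, fn in tasks:
        if failed:
            status[name] = "upstream_failed"  # never executed because an upstream task failed
            continue
        try:
            fn()
            status[name] = "success"
        except Exception as exc:
            print(f"task '{name}' failed: {exc}")
            status[name] = "failed"
            failed = True
    return status

def failing_validation():
    raise ValueError("3 rows have a missing department")

pipeline = [
    ("extract", lambda: None),
    ("mask_pii", lambda: None),
    ("validate", failing_validation),
    ("load", lambda: None),
]
print(run_pipeline(pipeline))
# {'extract': 'success', 'mask_pii': 'success', 'validate': 'failed', 'load': 'upstream_failed'}
```

In an actual Airflow DAG file, the same ordering is declared between operators with the bitshift syntax, for example `extract >> mask_pii >> validate >> load`, and under the default `all_success` trigger rule Airflow marks tasks downstream of a failure as `upstream_failed`.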

💡 For DevOps engineers

Airflow DAGs are Python code. They live in Git, go through code review, and can be deployed via CI/CD just like any other infrastructure-as-code. In production, Airflow itself typically runs on Kubernetes.
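Because DAGs are code, a CI job can reject broken DAG files before they reach the Airflow cluster. The sketch below is the cheapest possible check, a syntax parse of every `.py` file in a folder (the `dags` default folder name is an assumption). A more thorough check in Airflow 2.x loads the folder with `airflow.models.DagBag` and fails the build if its `import_errors` is non-empty, but that needs Airflow installed in the CI image.

```python
import ast
import pathlib

def find_syntax_errors(dag_dir):
    """Parse every .py file under dag_dir; return (path, message) for files that fail."""
    errors = []
    for path in sorted(pathlib.Path(dag_dir).rglob("*.py")):
        try:
            ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        except SyntaxError as exc:
            errors.append((str(path), f"line {exc.lineno}: {exc.msg}"))
    return errors

def main(argv):
    """CI entry point: print each broken file and return a non-zero exit code if any fail."""
    dag_dir = argv[0] if argv else "dags"
    errors = find_syntax_errors(dag_dir)
    for path, message in errors:
        print(f"{path}: {message}")
    return 1 if errors else 0
```

A CI step would call it with something like `sys.exit(main(sys.argv[1:]))`, so a non-zero return fails the pipeline just like a failing unit test.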

Data Size: From Raw to Final CSV

Let’s talk about data size. One of the most common questions is, how big is the data at each stage?

Here is a realistic breakdown for a 5 lakh employee dataset spanning 20 years.

| Stage | Size | What Happens |
|---|---|---|
| Raw data (in source systems) | 0.3 – 0.8 TB | Data scattered across HRMS databases, payroll systems, LMS platforms, etc. |
| Extracted (intermediate dumps) | ~400 GB | Exported as XML, JSON, or SQL dumps from each system |
| Combined & deduplicated | 50 – 150 GB | Duplicate employee records removed across systems, only ML-relevant fields kept |
| Final CSV (feature-engineered) | ~300 MB | One row per employee, with consolidated attributes ready for model training |

The final CSV contains one row per employee, and each column holds one attribute of that employee. It contains only what the model needs.

💡 Key Insight

The data goes from nearly a terabyte down to ~300 MB. This huge reduction happens because you are going from raw, duplicated, multi-format data to a clean, deduplicated, single-schema dataset with only the features that matter for prediction.
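A quick back-of-the-envelope check shows why these numbers are plausible. Assuming decimal units (1 MB = 10^6 bytes, 1 GB = 10^9 bytes) and the upper bound of 0.8 TB for raw data:

```python
MB = 10**6
GB = 10**9

employees = 500_000
raw_bytes = 800 * GB    # upper bound of the raw data (0.8 TB)
final_bytes = 300 * MB  # final feature-engineered CSV

bytes_per_row = final_bytes / employees
reduction = raw_bytes / final_bytes

print(f"{bytes_per_row:.0f} bytes per employee row")     # 600 bytes per employee row
print(f"~{reduction:,.0f}x smaller than the raw data")   # ~2,667x smaller than the raw data
```

Roughly 600 bytes of plain-text CSV per employee leaves room for a few dozen numeric and categorical columns, which is in line with a one-row-per-employee training set.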

Who Does What?

Building the dataset pipeline is a collaborative effort between multiple teams.

Data Engineering: Builds the ETL pipeline with connectors to HRMS, payroll APIs, and databases. Orchestrates with Airflow, transforms with Spark.

Data Scientists: Define which fields to keep and how to aggregate them. Specify feature engineering logic (e.g., “average last 3 performance ratings” vs. “latest only”).

InfoSec / Compliance: Ensure PII is properly masked, data handling meets GDPR/DPDP Act requirements, and audit trails are maintained.

Platform / DevOps: Provision infrastructure (Airflow servers, Spark clusters, S3 buckets), manage CI/CD for pipeline code, and set up monitoring.
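The Data Scientists' example above ("average last 3 performance ratings" vs. "latest only") is easier to appreciate with numbers. This sketch uses a hypothetical rating history for one employee and computes both candidate features; the two can tell different stories about the same person, which is why the choice belongs to the data scientists.

```python
from statistics import mean

# Hypothetical performance history for one employee.
ratings = [
    {"year": 2019, "rating": 4},
    {"year": 2020, "rating": 5},
    {"year": 2021, "rating": 3},
    {"year": 2022, "rating": 2},
]

def latest_rating(history):
    """Feature option 1: only the most recent rating."""
    return max(history, key=lambda r: r["year"])["rating"]

def avg_last_n(history, n=3):
    """Feature option 2: average of the n most recent ratings."""
    recent = sorted(history, key=lambda r: r["year"])[-n:]
    return mean(r["rating"] for r in recent)

print(latest_rating(ratings))         # 2
print(round(avg_last_n(ratings), 2))  # 3.33
```

"Latest only" highlights the recent drop; the 3-year average smooths it out. Data engineers then implement whichever definition is chosen inside the transform step.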

Here is what you would be responsible for as a DevOps/Platform engineer on this pipeline.

Deploy and manage Airflow on Kubernetes (Helm chart + KubernetesExecutor)

Provision and manage Spark clusters (EMR, Dataproc, or Spark on K8s)

Set up S3 buckets with proper IAM policies, encryption, and lifecycle rules

Build CI/CD pipelines to test and deploy DAG code changes

Set up monitoring for Airflow task durations, failure rates, and Spark job metrics via Prometheus/Grafana

Manage secrets (database credentials, API keys) using Vault or AWS Secrets Manager

Ensure pipeline logs are centralized (EFK stack or CloudWatch)
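As an example of the "lifecycle rules" item, the sketch below builds an S3 lifecycle configuration that expires bulky intermediate dumps and archives older final datasets. The prefixes (`raw/`, `final/`) and retention periods are hypothetical; real values must follow your compliance and reproducibility requirements. The dictionary follows the S3 lifecycle configuration structure, so the JSON can be passed to `aws s3api put-bucket-lifecycle-configuration` or as the `LifecycleConfiguration` argument of boto3's `put_bucket_lifecycle_configuration`.

```python
import json

def lifecycle_rules(raw_prefix="raw/", final_prefix="final/", raw_expire_days=30, archive_after_days=180):
    """Build an S3 lifecycle configuration for the dataset bucket (hypothetical values)."""
    return {
        "Rules": [
            {
                # Intermediate dumps (~400 GB per run) are deleted after a short retention window.
                "ID": "expire-intermediate-dumps",
                "Filter": {"Prefix": raw_prefix},
                "Status": "Enabled",
                "Expiration": {"Days": raw_expire_days},
            },
            {
                # Final datasets are kept but moved to cheaper archival storage over time.
                "ID": "archive-final-datasets",
                "Filter": {"Prefix": final_prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": archive_after_days, "StorageClass": "GLACIER"}],
            },
        ]
    }

print(json.dumps(lifecycle_rules(), indent=2))
```

Keeping this configuration in code (or in Terraform) means retention changes go through the same review and CI/CD process as the DAGs themselves.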

My Experience With Airflow:

In one project, even though I was part of the DevOps team, I also wrote Airflow DAGs in Python while managing Airflow itself.

So roles are not always strict. They depend on the project and the team.

That’s a Wrap!

Building the dataset is the very first step in any MLOps workflow, and the most complex one.

In a real-world enterprise setup, you are not just loading a CSV file. You pull data from multiple systems, handle compliance requirements, transform different formats, validate data quality, and finally produce a clean dataset that data scientists can trust.

Here are the key points to remember:

Data comes from many systems and formats

PII must be handled before any ML work

ETL pipelines are critical and complex

Raw data shrinks massively after processing

Pipelines must be reproducible and monitored

Doing a fully hands-on implementation of the entire data pipeline we just discussed is very complex. It involves multiple enterprise systems and many teams working together.

The goal of this edition is not to build everything end to end. The goal is to help you see the big picture of how a real-world data pipeline works, and to give you the right context for the hands-on work that comes later.

Think of this edition as connecting the dots.

What’s Coming Next

In the next MLOps edition, we will move into a fully hands-on walkthrough.

Instead of enterprise systems, we will use a publicly available employee dataset so you can follow along easily on your own machine. This way, you get practical experience without the complexity of real enterprise data systems.

Let’s continue building this step by step.

I have shared two reference links below that walk through Airflow deployment and management.

They will also help you understand system design aspects of running and managing Airflow in production, such as scalability, reliability, and operational best practices.

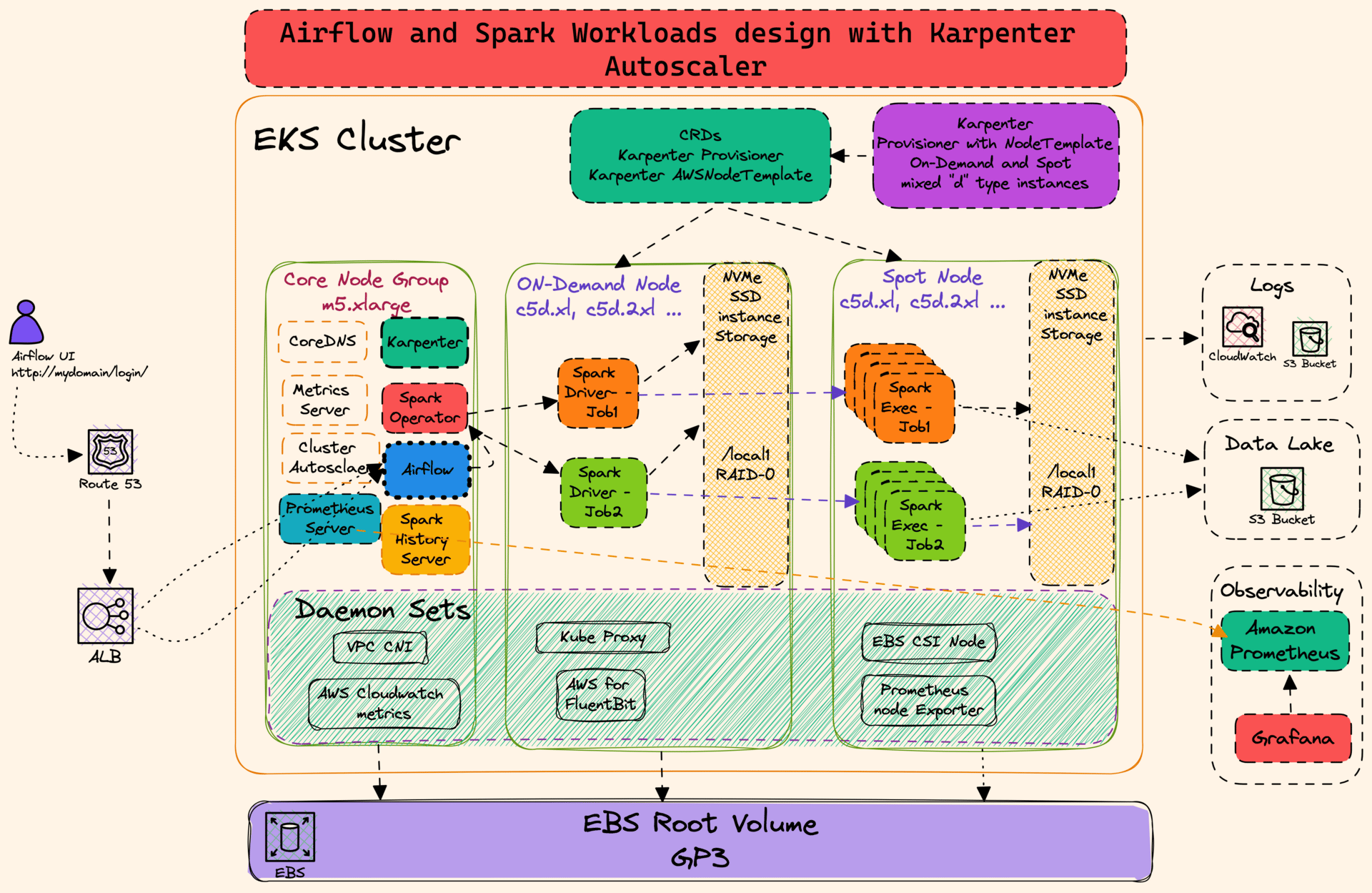

📦 Reference 01: Self-managed Apache Airflow deployment on EKS

This blueprint deploys a self-managed Apache Airflow setup on Amazon EKS managed node groups and uses Karpenter to run the workloads.

📦 Reference 02: How does Expedia manage 200 isolated Airflow clusters at scale?

The core of Expedia’s strategy is a multi-tenant platform built on Kubernetes. Instead of running one monolithic Airflow instance, they create isolated, self-contained environments for each team.

This isolation allows different teams to run different sets of Python dependencies without conflict. Team A can have completely different libraries than Team B, and their DAGs, connections, and workloads remain separate.

🤝 Help a Teammate Learn

Found this useful?

Share the subscription link with a colleague or fellow DevOps engineer.